"AI Concept": difference between revisions


Revision as of 9 November 2025, 22:12

https://learn.microsoft.com/en-us/azure/azure-functions/functions-bindings-http-webhook-trigger?tabs=python-v2%2Cisolated-process%2Cnodejs-v4%2Cfunctionsv2&pivots=programming-language-csharp

https://learn.microsoft.com/en-us/azure/azure-functions/functions-networking-options?tabs=azure-portal

https://komodor.com/blog/aiops-for-kubernetes-or-kaiops/

https://learn.microsoft.com/en-us/azure/architecture/ai-ml/guide/ai-agent-design-patterns

https://learn.microsoft.com/en-us/azure/architecture/ai-ml/architecture/baseline-azure-ai-foundry-chat

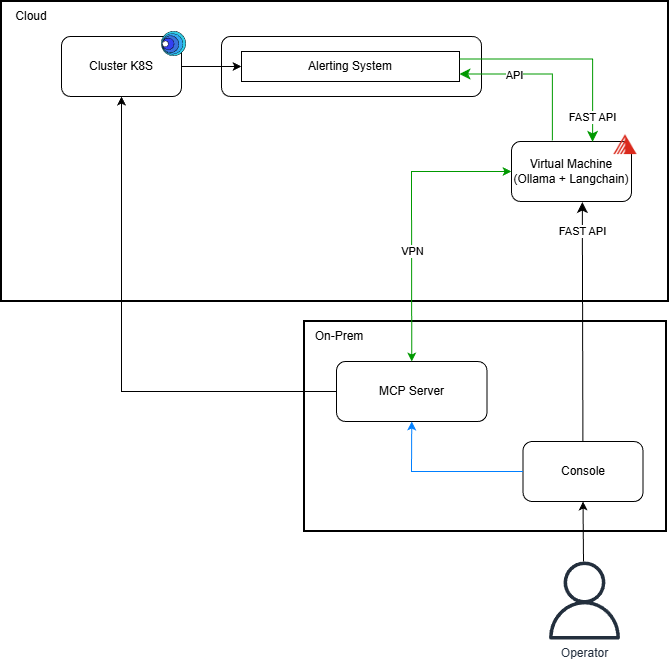

Technologies:

- K8sGPT

- LangChain (+ LangFuse for monitoring?)

- Ollama (+ there are tools that can improve model performance)

- MCPO // OpenWebUI

- A well-provisioned virtual machine at Exo

Glossary

Large Language Model (LLM)

A Large Language Model (LLM) is the engine behind an AI application such as ChatGPT. The application is the interface; the LLM is the model that generates the answers. For example, ChatGPT has been powered by GPT-4 and, later, GPT-4o.

Azure AI Foundry is a service that allows you to choose which Large Language Model (LLM) you want to use.

Model Context Protocol (MCP)

The Model Context Protocol (MCP) is a protocol that standardizes communication between Large Language Models (LLMs) and external systems, such as ITSM tools (like ServiceNow), Kubernetes clusters, and more.

You can use an MCP client, for example Continue.dev in your IDE (like VS Code), and then configure MCP servers, such as one that exposes your Kubernetes cluster, to enable your LLM to interact with these systems.
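To make the client/server exchange more concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method. The sketch below only builds such a request; the tool name `list_pods` and its `namespace` argument are hypothetical and depend on what a given MCP server actually exposes.

```python
import json
from itertools import count

# JSON-RPC request ids should be unique within a session.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request for an MCP server."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool and argument names, for illustration only.
message = make_tool_call("list_pods", {"namespace": "default"})
print(message)
```

In practice the MCP client (Continue.dev, OpenWebUI via MCPO, etc.) builds and sends these messages for you; the sketch is only meant to show what travels over the wire.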

Retrieval-Augmented Generation (RAG)

Technology Stack

LangChain

LangChain is a framework for building LLM-powered applications. Among other things, it helps you structure prompts with PromptTemplate. For example, in an alerting system, you can define a template that guides the model to follow a consistent debugging structure in its responses:

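# PromptTemplate lives in langchain_core in current LangChain releases;
# older releases exposed it as `from langchain import PromptTemplate`.
from langchain_core.prompts import PromptTemplate

# Template that makes the model follow the same debugging structure for every alert.
prompt = PromptTemplate(
    input_variables=["alert", "logs", "metrics"],
    template="""
You are a Kubernetes expert.
An incident has been detected:
{alert}
Here are the pod logs:
{logs}
Here are its metrics:
{metrics}
Analyze the probable causes and propose precise corrective actions.
""",
)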
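Conceptually, filling such a template is just named-placeholder substitution. The sketch below is a dependency-free stand-in that uses Python's built-in `str.format` rather than LangChain itself; `render_prompt`, `ALERT_TEMPLATE`, and the sample alert, logs, and metrics are all made up for illustration.

```python
ALERT_TEMPLATE = (
    "You are a Kubernetes expert.\n"
    "An incident has been detected:\n{alert}\n"
    "Here are the pod logs:\n{logs}\n"
    "Here are its metrics:\n{metrics}\n"
    "Analyze the probable causes and propose precise corrective actions.\n"
)

REQUIRED_VARIABLES = ("alert", "logs", "metrics")


def render_prompt(**values: str) -> str:
    """Fill the template, failing early if a variable is missing."""
    missing = [name for name in REQUIRED_VARIABLES if name not in values]
    if missing:
        raise KeyError(f"missing template variables: {missing}")
    return ALERT_TEMPLATE.format(**values)


# Hypothetical incident data.
text = render_prompt(
    alert="KubePodCrashLooping on pod api-7d9f",
    logs="OOMKilled",
    metrics="memory: 512Mi / 512Mi",
)
print(text)
```

The point of the fixed template is that every alert reaches the model in the same shape, so responses stay comparable from one incident to the next.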

K8sGPT

K8sGPT is an open-source tool that scans Kubernetes clusters, detects issues, and uses a Large Language Model (LLM) backend such as Azure OpenAI to explain problems and suggest solutions in natural language.
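As a rough idea of how this could be scripted, the sketch below wraps the `k8sgpt analyze` command from Python. It assumes the `k8sgpt` binary is installed, has access to the cluster, and has an AI backend already configured (needed for `--explain`); the helper names `build_analyze_command` and `run_analysis` are hypothetical.

```python
import shutil
import subprocess
from typing import List, Optional


def build_analyze_command(namespace: Optional[str] = None,
                          explain: bool = True,
                          output: str = "json") -> List[str]:
    """Build the argv for a `k8sgpt analyze` run."""
    cmd = ["k8sgpt", "analyze", "--output", output]
    if explain:
        cmd.append("--explain")  # ask the configured LLM backend to explain findings
    if namespace:
        cmd += ["--namespace", namespace]
    return cmd


def run_analysis(namespace: Optional[str] = None) -> Optional[str]:
    """Run the scan and return raw output, or None if k8sgpt is not installed."""
    if shutil.which("k8sgpt") is None:
        return None
    completed = subprocess.run(
        build_analyze_command(namespace),
        capture_output=True, text=True, check=True,
    )
    return completed.stdout


print(" ".join(build_analyze_command("default")))
```

The returned text could then be fed into the alert prompt as additional context, though how best to wire that up is left open here.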

Ollama

Ollama is an open-source tool that lets you download and run large language models (LLMs) like Llama 3 or Mistral locally, allowing you to use AI without relying on the cloud.
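Once a model is pulled, Ollama serves a local HTTP API, by default on port 11434. The sketch below queries its `/api/generate` endpoint using only the standard library; it assumes a server is running locally and that a model named `llama3` has been pulled, and the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local endpoint


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generation request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]


# Example, to run only with a local server and the model available:
# print(generate("llama3", "Explain CrashLoopBackOff in one sentence."))
```

With `"stream": False` the server returns a single JSON object instead of a stream of chunks, which keeps the client code short at the cost of waiting for the full answer.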