Kubernetes - Container orchestration

Introduction

Kubernetes (or K8s) is an open-source system for container orchestration. It automates the deployment, scaling and management of containers across a group of machines, and works together with container runtimes such as Docker. Kubernetes was originally developed by Google and later donated to the Cloud Native Computing Foundation (CNCF). Version 1.0 was released in July 2015.

Compatibility

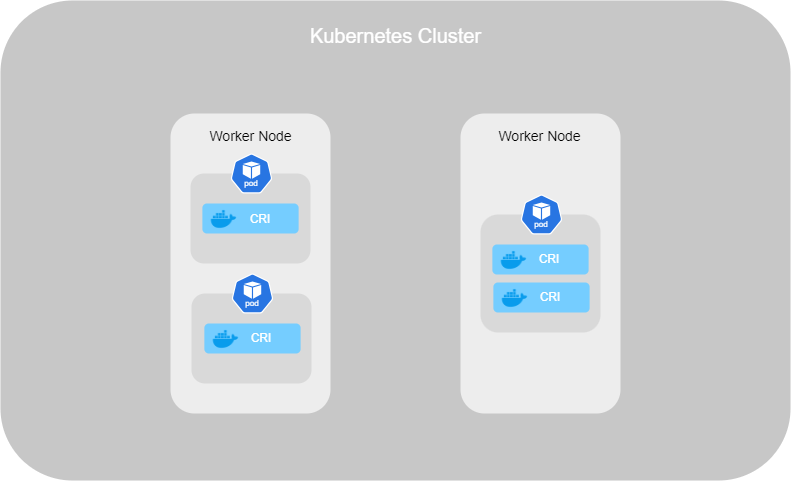

At the beginning, Kubernetes only worked with Docker. As other container runtimes such as rkt appeared, Kubernetes introduced the Container Runtime Interface (CRI), a plugin interface that lets the kubelet work with any container runtime that implements it. Runtimes and images are in turn expected to follow the Open Container Initiative (OCI) standards.

The Open Container Initiative (OCI) consists of an image-spec and a runtime-spec:

- image-spec: it defines the format of a container image, that is, how an image should be built and packaged.

- runtime-spec: it defines how a container runtime should create and run a container.

However, Docker does not implement the CRI, because it was developed long before the CRI existed. To keep supporting Docker, Kubernetes maintained an adapter called dockershim, which translated CRI calls into calls to the Docker Engine.

Dockershim was deprecated in Kubernetes v1.20 and removed in v1.24. Since then, Docker Engine can no longer be used directly as the Kubernetes container runtime. Images built with Docker still work, however, because they follow the OCI image-spec. To keep using Docker Engine as the runtime, you can install cri-dockerd, an adapter maintained by Mirantis that implements the CRI on top of Docker Engine.

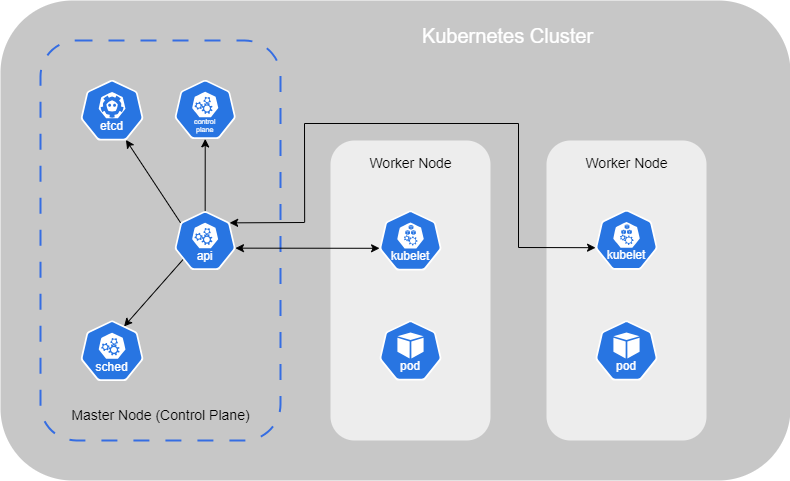

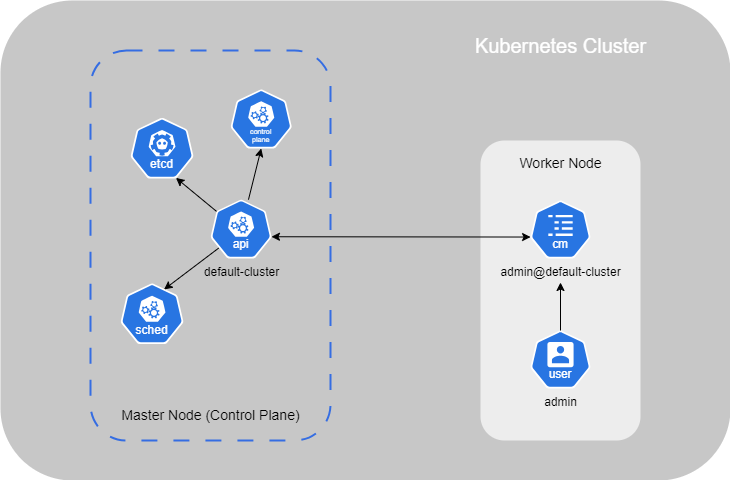

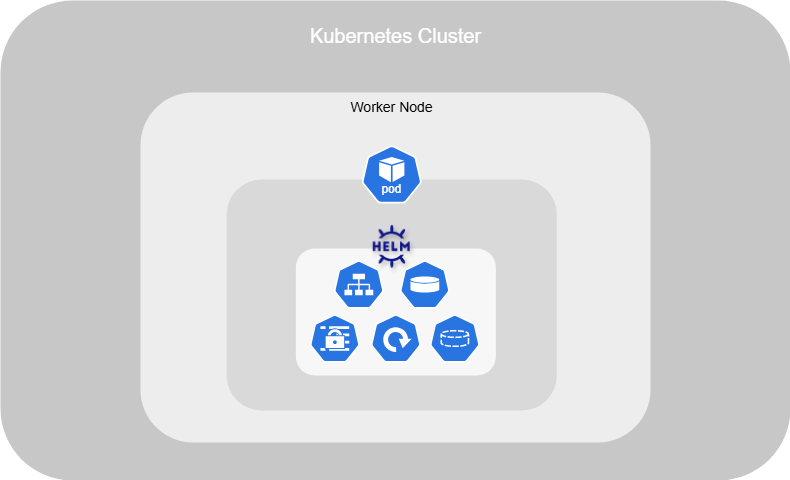

Architecture

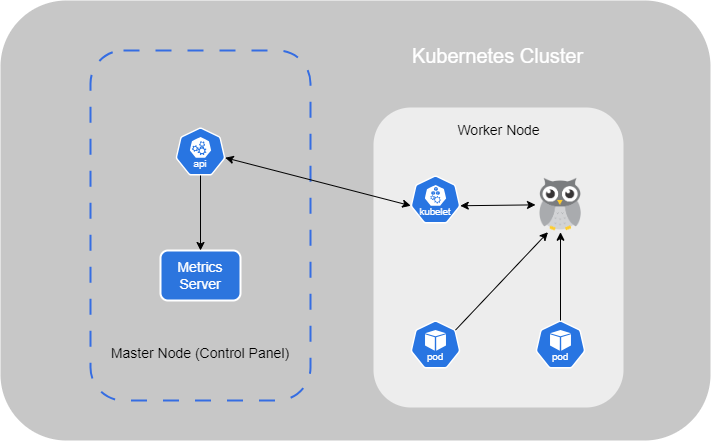

The first element of a Kubernetes cluster is the Worker Node (Nodes were previously called minions). A Worker Node can be a physical or virtual machine on which Kubernetes runs containers.

You should have multiple Worker Nodes to use Kubernetes properly. Indeed, if one Worker Node fails, the others are still available to take over and keep the application running. This setup is called a cluster.

The Master Node (called the control plane node in recent Kubernetes documentation) watches over the Worker Nodes in the cluster, moves the workload to other Nodes when needed, and is responsible for the actual container orchestration.

The Master and Worker Nodes run several components, which are installed when you set up Kubernetes on your system:

- API Server: the front end of Kubernetes. Users, command-line tools and the other components all interact with the cluster through it;

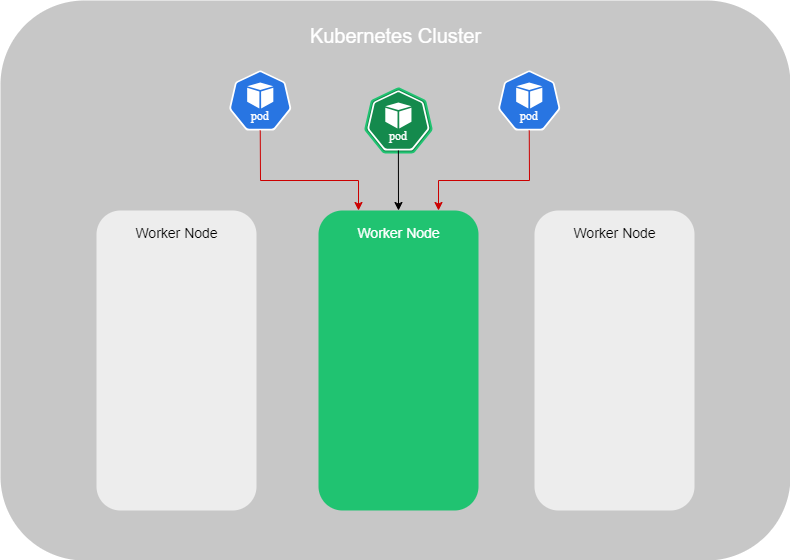

- Scheduler: it distributes the workload and containers across the Worker Nodes in the cluster. When a new Pod is created, it automatically assigns it to a Node.

- Controller: it acts as the brain of the orchestration. It notices and responds when Worker Nodes or containers go down, and decides when new containers need to be brought up.

- etcd: a key-value store that holds all the cluster data, including the information about the Worker Nodes and Masters;

- Pod: this is explained in another section;

- Kubelet: the agent running on each Worker Node in the cluster. It ensures that the containers are running as expected on the Node.

In this setup, the Node running the kube-apiserver is designated as the cluster Master. The other Worker Nodes, with their Kubelet agent, communicate with the Master to share data and execute tasks upon request.
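To make the Scheduler's role more concrete, here is a toy shell sketch of its basic idea: filter out the Nodes that cannot fit a Pod, then pick the best remaining one. It is not how kube-scheduler is implemented (the real scheduler runs many filtering and scoring plugins). The input format, one "node free-CPU-in-millicores" pair per line, the rule "most free CPU wins", and the Node names are all assumptions for illustration.

```shell
#!/bin/sh
# pick_node REQUEST_MILLICORES
# Reads lines "NODE FREE_MILLICORES" on stdin and prints the Node with the
# most free CPU among those that can fit the request. Exits with status 1
# when no Node fits (a real Pod would then stay Pending).
pick_node() {
    awk -v req="$1" '
        BEGIN { best = -1 }
        # Filter: skip Nodes without enough free CPU.
        # Score: keep the Node with the most free CPU seen so far.
        $2 + 0 >= req + 0 && $2 + 0 > best { best = $2 + 0; node = $1 }
        END {
            if (node == "") exit 1
            print node
        }
    '
}

# Two hypothetical Worker Nodes:
printf '%s\n' 'worker-1 1500' 'worker-2 3000' | pick_node 500    # prints worker-2
```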

Tools and Distributions

This section will be completed soon

- Kubectl: the command-line interface used to interact with a Kubernetes cluster. It allows users to create, modify, retrieve information about, or delete resources.

- Kubeadm: a tool designed to simplify the installation and configuration of a Kubernetes cluster. It lets you designate which machines function as the Master Node and which serve as Worker Nodes.

- Minikube: runs a local Kubernetes cluster and is intended for small-scale setups such as learning and testing. For example, if you want to run both the Master and the Worker on the same machine, Minikube is the appropriate tool.

- K3s

Debugging Containers Tool (crictl)

Kubernetes provides a command-line tool named crictl (from the cri-tools project), which is used to interact with container runtimes.

It is not meant for deploying or managing applications but for debugging container runtimes. It works with any runtime that is CRI-compatible, and its commands are quite similar to Docker's.

- To pull an image, run the following command:

marijan$ crictl pull [IMAGE]

- To list existing images, execute:

marijan$ crictl images

- To list existing containers, run:

marijan$ crictl ps -a

- To execute a command inside a container, run:

marijan$ crictl exec -i -t [CONTAINER ID] [CMD]

- To view the logs of a container:

marijan$ crictl logs [CONTAINER ID]

- To list Pods:

marijan$ crictl pods

- To get low-level information about a container, run the following command (crictl inspecti and crictl inspectp do the same for images and Pods):

marijan$ crictl inspect [CONTAINER ID]

- To display the resource usage statistics of containers, run:

marijan$ crictl stats [CONTAINER ID]

- To show the runtime version information:

marijan$ crictl version
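crictl needs to know which CRI socket to talk to. One way to set this once is a /etc/crictl.yaml file with runtime-endpoint and image-endpoint keys. The sketch below writes such a file; the socket unix:///var/run/cri-dockerd.sock matches the cri-dockerd setup used later in this document, while the timeout of 10 seconds is an arbitrary choice. The target path is a parameter so the function can be tried on a scratch file first.

```shell
#!/bin/sh
# write_crictl_config FILE ENDPOINT
# Writes a crictl configuration that points both the runtime and the image
# endpoints at the same CRI socket.
write_crictl_config() {
    cat > "$1" <<EOF
runtime-endpoint: $2
image-endpoint: $2
timeout: 10
EOF
}

# On the machine itself (as root):
# write_crictl_config /etc/crictl.yaml unix:///var/run/cri-dockerd.sock
# crictl ps -a
```

Alternatively, the endpoint can be given on each call, for example crictl --runtime-endpoint unix:///var/run/cri-dockerd.sock ps -a.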

Vanilla Cluster Deployment (Linux)

In this section, I'll detail the deployment of my Kubernetes cluster on a Linux system, including all the information regarding the tools and resources used.

Prerequisites

Before setting up a Kubernetes cluster, make sure the following prerequisites are met:

- Machines: if you want to use Kubeadm with a separate Master and Worker, at least two machines are necessary. Alternatively, Minikube can be used for single-machine setups.

- Operating system: the machines must run an up-to-date operating system to ensure compatibility and security.

- Resources: each machine should have at least 2 GB of RAM and 2 CPUs to handle Kubernetes workloads (kubeadm enforces the 2 CPU minimum on the Master).

- Network connectivity: the machines must be able to reach each other over the network to enable cluster communication and coordination.

In my setup, I've established two virtual machines running on Debian 11. To manage networking, I've implemented a firewall with configured rules to facilitate communication and provide access to these machines.
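The resource requirements above can be checked with a short shell sketch before installing anything. It takes the total memory (as reported in /proc/meminfo) and the CPU count (from nproc) as arguments. The 1700 MiB threshold is an assumption that leaves some margin, because MemTotal on a "2 GB" machine is usually a little below 2048 MiB once the kernel has reserved its share.

```shell
#!/bin/sh
# Minimum values checked below. MIN_MEM_MIB is an assumption: slightly under
# 2 GB, since MemTotal reports less than the installed RAM.
MIN_MEM_MIB=1700
MIN_CPUS=2

# check_node_resources MEM_KIB CPUS
# Prints one line per unmet requirement and returns 1 if any is unmet.
check_node_resources() {
    mem_mib=$(( $1 / 1024 ))
    status=0
    if [ "$mem_mib" -lt "$MIN_MEM_MIB" ]; then
        echo "RAM too low: ${mem_mib} MiB (need at least ${MIN_MEM_MIB} MiB)"
        status=1
    fi
    if [ "$2" -lt "$MIN_CPUS" ]; then
        echo "Not enough CPUs: $2 (need at least ${MIN_CPUS})"
        status=1
    fi
    return "$status"
}

# On each machine:
# check_node_resources "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" "$(nproc)"
```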

Installation

The installation process is the same for the Master and the Node machines.

Container Runtime (cri-dockerd)

Firstly, to run containers within Pods, you need a container runtime compatible with CRI. In our case, we'll use Docker Engine as the container runtime.

1. To install Docker Engine from the apt repository, you first need to set up Docker's apt repository. Start by adding Docker's official GPG key:

marijan$ apt-get install ca-certificates curl
marijan$ install -m 0755 -d /etc/apt/keyrings
marijan$ curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc
marijan$ chmod a+r /etc/apt/keyrings/docker.asc

2. Then, add the repository to the apt sources and update the package index:

marijan$ echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian \
  $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
marijan$ apt-get update

3. Next, install the Docker packages:

marijan$ apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

4. Finally, verify that everything works by running a test image:

marijan$ docker run hello-world

As explained in the Compatibility section, Docker Engine is no longer supported directly by Kubernetes. To make it compatible, you must install cri-dockerd. Since we build cri-dockerd from source and it is written in Go, you need to install Go first.

1. Download Go from the official website:

marijan$ wget https://go.dev/dl/go1.22.4.linux-amd64.tar.gz

2. Once it is downloaded, extract the archive into /usr/local, which creates the /usr/local/go directory used in the next step:

marijan$ tar -C /usr/local -xzf go1.22.4.linux-amd64.tar.gz

3. Then, add Go to the PATH environment variable (this only applies to the current shell session):

marijan$ export PATH=$PATH:/usr/local/go/bin

4. Finally, verify that everything works correctly:

marijan$ go version
go version go1.22.4 linux/amd64
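The export command above only affects the current shell session. A minimal sketch for making it permanent appends the line to a startup file, but only once, so running the setup again does not pile up duplicate PATH entries. The choice of ~/.profile and the function name add_line_once are assumptions; use the startup file of your own shell.

```shell
#!/bin/sh
# add_line_once FILE LINE: append LINE to FILE unless the exact line is
# already present, so the step can be re-run safely.
add_line_once() {
    touch "$1"
    grep -qxF -- "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

# Make Go available in every new login shell (single quotes keep $PATH
# unexpanded so it is evaluated at login time):
# add_line_once "$HOME/.profile" 'export PATH=$PATH:/usr/local/go/bin'
```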

Now that we've installed Go, we can set up cri-dockerd.

1. First, install Git if it's not already installed:

marijan$ apt-get install -y git

2. Then, clone the repository from GitHub:

marijan$ git clone https://github.com/Mirantis/cri-dockerd.git

3. Once cloned, create a bin folder and build cri-dockerd with Go:

marijan$ cd cri-dockerd
marijan:~/cri-dockerd$ mkdir bin
marijan:~/cri-dockerd$ go build -o bin/cri-dockerd

4. Make sure the /usr/local/bin directory exists:

marijan$ mkdir -p /usr/local/bin

5. Install the cri-dockerd binary and its systemd units, then point the service at the installed binary:

marijan:~/cri-dockerd$ install -o root -g root -m 0755 bin/cri-dockerd /usr/local/bin/cri-dockerd
marijan:~/cri-dockerd$ install packaging/systemd/* /etc/systemd/system
marijan:~/cri-dockerd$ sed -i -e 's,/usr/bin/cri-dockerd,/usr/local/bin/cri-dockerd,' /etc/systemd/system/cri-docker.service

6. Then, reload systemd and enable the cri-docker socket:

marijan$ systemctl daemon-reload
marijan$ systemctl enable --now cri-docker.socket

7. Finally, check that everything works:

marijan$ systemctl status cri-docker.socket

Kubeadm, Kubelet and Kubectl

Now that our container runtime has been set up, we can install all the tools needed to configure and manage our Kubernetes cluster.

1. First, install the packages needed to use the Kubernetes apt repository:

marijan$ apt-get install -y apt-transport-https ca-certificates curl gpg

2. Then, download the public signing key for the Kubernetes package repositories:

marijan$ curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

3. Add the appropriate Kubernetes apt repository:

marijan$ echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

4. Update the apt package index, then install kubelet, kubeadm and kubectl and pin their versions:

marijan$ apt-get update
marijan$ apt-get install -y kubelet kubeadm kubectl
marijan$ apt-mark hold kubelet kubeadm kubectl

5. Enable the kubelet service before running kubeadm:

marijan$ systemctl enable --now kubelet

6. Finally, disable swap, because by default the kubelet does not start when swap is enabled. On Debian, comment out the swap line in the file /etc/fstab and reboot your system:

marijan$ vim /etc/fstab
# swap was on /dev/sda5 during installation
# UUID=e5c9c68a-3429-49b9-8747-b3af73000bc5 none swap sw 0 0
# /dev/sr0 /media/cdrom0 udf,iso9660 user,noauto 0 0
marijan$ reboot
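Editing /etc/fstab by hand works; a scripted sketch of the same step is shown below. It comments out every active fstab entry whose filesystem type (third field) is swap and keeps a .bak copy of the original file. Running swapoff -a afterwards turns swap off immediately, without a reboot. The regular expression assumes the usual whitespace-separated fstab layout, and the function name is hypothetical.

```shell
#!/bin/sh
# disable_swap_in_fstab FILE
# Prefixes with '#' every uncommented line whose third field is "swap".
# A backup of the original file is kept as FILE.bak.
disable_swap_in_fstab() {
    sed -i.bak -E \
        's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]])/#\1/' \
        "$1"
}

# On the real system (as root):
# disable_swap_in_fstab /etc/fstab
# swapoff -a
```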

Configuration

The configuration process is different between the Master and the Node machines.

Setting up the Network (Calico)

To set up a network in your Kubernetes cluster, there are multiple add-ons available, each with different functionalities. This information can be found here. In our case, we will use Calico.

This section applies to the Master only: do not run these steps on the Node machines.
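Calico's default Pod network, 192.168.0.0/16, must not overlap the network your machines are on; otherwise traffic to some addresses cannot be routed correctly, and Calico's documentation asks you to pick another range in that case. The sketch below checks two IPv4 CIDR ranges for overlap in plain POSIX shell. The Node subnet 172.16.0.0/24 in the example is an assumption based on the Master address 172.16.0.10 that appears later in this document.

```shell
#!/bin/sh
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1              # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_contains CIDR IP: succeed if IP belongs to the CIDR range.
cidr_contains() {
    net=${1%/*}
    bits=${1#*/}
    if [ "$bits" -eq 0 ]; then
        mask=0
    else
        mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    fi
    [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# cidrs_overlap CIDR_A CIDR_B: succeed if the two ranges share any address.
# CIDR blocks are either nested or disjoint, so they overlap exactly when
# one of them contains the other's network address.
cidrs_overlap() {
    cidr_contains "$1" "${2%/*}" || cidr_contains "$2" "${1%/*}"
}

if cidrs_overlap 192.168.0.0/16 172.16.0.0/24; then
    echo "Overlap: choose another --pod-network-cidr"
else
    echo "No overlap between the Pod network and the Node network"
fi
```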

1. Run the following command to initialize the cluster with the Pod network CIDR expected by Calico (192.168.0.0/16). Specify the CRI socket as well, which is cri-dockerd in this case:

marijan$ kubeadm init --pod-network-cidr=192.168.0.0/16 --cri-socket=unix:///var/run/cri-dockerd.sock

2. Once the cluster is initialized, configure kubectl:

marijan$ mkdir -p $HOME/.kube
marijan$ cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
marijan$ chown $(id -u):$(id -g) $HOME/.kube/config

3. Then, download the Calico networking manifest:

marijan$ curl https://raw.githubusercontent.com/projectcalico/calico/v3.28.0/manifests/calico.yaml -O

4. Apply the manifest once it is downloaded:

marijan$ kubectl apply -f calico.yaml

5. Finally, check that the Calico Pods (and the other system Pods) are Running:

marijan$ kubectl get pods -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-564985c589-qf9cs   1/1     Running   0          21m
kube-system   calico-node-qtvz2                          1/1     Running   0          21m
kube-system   coredns-7db6d8ff4d-6m78v                   1/1     Running   0          94m
kube-system   coredns-7db6d8ff4d-bzxk9                   1/1     Running   0          94m
kube-system   etcd-deb-vm-01                             1/1     Running   0          94m
kube-system   kube-apiserver-deb-vm-01                   1/1     Running   0          94m
kube-system   kube-controller-manager-deb-vm-01          1/1     Running   0          94m
kube-system   kube-proxy-k96tw                           1/1     Running   0          94m
kube-system   kube-scheduler-deb-vm-01                   1/1     Running   0          94m
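On a busy cluster, scanning this table by eye is tedious. The sketch below filters the kubectl get pods -A output and prints only the Pods whose STATUS is not Running. It relies on STATUS being the fourth column when -A is used; Pods of finished Jobs (Completed) would also be listed. The function name is hypothetical.

```shell
#!/bin/sh
# not_running: reads the output of `kubectl get pods -A` on stdin and prints
# "namespace/name status" for every Pod whose STATUS column is not Running.
not_running() {
    # Skip the header line; STATUS is the 4th column when -A is used.
    awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# On the Master:
# kubectl get pods -A | not_running
```

An empty result means every Pod reports Running. kubectl can also block until Pods are ready, for example with kubectl wait --for=condition=Ready pods --all -n kube-system --timeout=300s.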

Connecting Nodes

Once the network is set up, you can connect your Nodes to the cluster.

1. First, on the Master, create a token and compute the SHA-256 hash of the cluster CA's public key:

marijan$ kubeadm token create
5didvk.d09sbcov8ph2amjw
marijan$ openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
> openssl dgst -sha256 -hex | sed 's/^.* //'
8cb2de97839780a412b93877f8507ad6c94f73add17d5d7058e91741c9d5ec78

2. Then, execute the join command on the Node machine:

marijan$ kubeadm join --token 5didvk.d09sbcov8ph2amjw 172.16.0.10:6443 --discovery-token-ca-cert-hash sha256:8cb2de97839780a412b93877f8507ad6c94f73add17d5d7058e91741c9d5ec78 --cri-socket=unix:///var/run/cri-dockerd.sock

- --token: the token created in step 1;

- HOST:PORT: the address of the Master's API server (6443 is the default API server port);

- --discovery-token-ca-cert-hash: sha256: followed by the hash computed in step 1;

- --cri-socket: the socket of the container runtime used (cri-dockerd here).
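Copying the token and the hash by hand is error-prone. The sketch below checks their format before the join command is run: a bootstrap token is six lowercase alphanumeric characters, a dot, and sixteen more, and the discovery hash is sha256: followed by 64 hexadecimal digits. The function name is hypothetical, and the script only prints the command rather than running it.

```shell
#!/bin/sh
# valid_join_params TOKEN HASH
# Succeeds if TOKEN matches the bootstrap token format [a-z0-9]{6}.[a-z0-9]{16}
# and HASH is "sha256:" followed by 64 lowercase hexadecimal digits.
valid_join_params() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' &&
        printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

TOKEN=5didvk.d09sbcov8ph2amjw
HASH=sha256:8cb2de97839780a412b93877f8507ad6c94f73add17d5d7058e91741c9d5ec78
if valid_join_params "$TOKEN" "$HASH"; then
    echo "kubeadm join 172.16.0.10:6443 --token $TOKEN --discovery-token-ca-cert-hash $HASH --cri-socket=unix:///var/run/cri-dockerd.sock"
else
    echo "Token or hash has an unexpected format" >&2
fi
```

Alternatively, kubeadm token create --print-join-command on the Master prints a complete kubeadm join command with a fresh token and the CA hash; you then only need to append the --cri-socket option.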

Enable auto-completion for kubectl commands (bash)

If you want to enable auto-completion for all kubectl commands in your shell (bash), and use the shortcut k to run kubectl commands, execute the following command:

marijan$ source <(kubectl completion bash) && alias k=kubectl && complete -F __start_kubectl k

The source command enables auto-completion for all kubectl commands in your current session. The alias command defines k as a shortcut for kubectl, so you can type k instead of kubectl. The complete command ensures that the alias k benefits from the same auto-completion as kubectl.

These settings only last for the current session. To make them permanent, add them to your ~/.bashrc and reload it:

marijan$ echo 'source <(kubectl completion bash)' >> ~/.bashrc
marijan$ echo 'alias k=kubectl' >> ~/.bashrc
marijan$ echo 'complete -o default -F __start_kubectl k' >> ~/.bashrc
marijan$ source ~/.bashrc

Bugs

Here is a list of bugs you might encounter.

Connection refused

After rebooting the virtual machine where Kubernetes was running, I got the following error:

E0624 15:01:00.267236 2317 memcache.go:265] couldn't get current server API group list: Get "https://172.16.0.10:6443/api?timeout=32s": dial tcp 172.16.0.10:6443: connect: connection refused
E0624 15:01:00.267541 2317 memcache.go:265] couldn't get current server API group list: Get "https://172.16.0.10:6443/api?timeout=32s": dial tcp 172.16.0.10:6443: connect: connection refused
E0624 15:01:00.269180 2317 memcache.go:265] couldn't get current server API group list: Get "https://172.16.0.10:6443/api?timeout=32s": dial tcp 172.16.0.10:6443: connect: connection refused
E0624 15:01:00.269548 2317 memcache.go:265] couldn't get current server API group list: Get "https://172.16.0.10:6443/api?timeout=32s": dial tcp 172.16.0.10:6443: connect: connection refused
E0624 15:01:00.271094 2317 memcache.go:265] couldn't get current server API group list: Get "https://172.16.0.10:6443/api?timeout=32s": dial tcp 172.16.0.10:6443: connect: connection refused

I fixed this by restarting the kubelet service (systemctl restart kubelet). Make sure that the kubelet and your container runtime (Docker Engine and cri-dockerd here) are running properly.

If it still doesn't work, check how kubectl is configured on the worker node:

marijan$ kubectl config view
apiVersion: v1
clusters: null
contexts: null
current-context: ""
kind: Config
preferences: {}
users: null

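If it's not configured correctly (as in the output above, where clusters is null), check that the /etc/kubernetes/kubelet.conf file exists (it is created by kubeadm join; if it is missing, go back to the Connecting Nodes step), then copy an admin kubeconfig into place. Note that /etc/kubernetes/admin.conf is generated on the Master by kubeadm init, so on a worker node you first have to copy it over from the Master:

marijan$ mkdir -p ~/.kube
marijan$ sudo cp /etc/kubernetes/admin.conf ~/.kube/config
marijan$ sudo chown $(id -u):$(id -g) ~/.kube/config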
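When kubectl reports connection refused, it also helps to check which API server address a kubeconfig actually points to, and whether the file defines a cluster at all. The sketch below extracts the first server: entry from a kubeconfig file with awk. It is a quick text search, not a real YAML parser, and the function name is hypothetical.

```shell
#!/bin/sh
# kubeconfig_server FILE
# Prints the first "server:" URL found in a kubeconfig file. Fails with a
# message on stderr if the file is missing or defines no cluster server.
kubeconfig_server() {
    if [ ! -r "$1" ]; then
        echo "missing or unreadable: $1" >&2
        return 1
    fi
    server=$(awk '$1 == "server:" { print $2; exit }' "$1")
    if [ -z "$server" ]; then
        echo "no cluster server defined in $1" >&2
        return 1
    fi
    echo "$server"
}

# On a worker node, compare both files with the Master's address:
# kubeconfig_server /etc/kubernetes/kubelet.conf
# kubeconfig_server "$HOME/.kube/config"
```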

Manifest file (YAML)

There are two ways to create an object in Kubernetes:

- Imperative: create an object through a command;

- Declarative: create an object through a manifest file.

Manifests (configuration files) are written in YAML (YAML Ain't Markup Language) and are used as input for the creation of objects such as Pods, Deployments, ReplicaSets, and more.

In Kubernetes, a .yml file for objects such as Pods, ReplicaSets or Deployments starts with these four top-level sections (some objects, such as ConfigMaps and Secrets, use data instead of spec):

marijan$ cat yaml-definition.yml
apiVersion: <version-object>
kind: <object>
metadata:
spec:

Here are the four main sections detailed and explained :

- apiVersion: the version of the Kubernetes API used to create the object. Each type of object has its own version, which can differ from others (for example v1 for a Pod, apps/v1 for a Deployment).

- kind: the type of object you are going to create.

- metadata: data about the object being deployed, such as its name, labels, and application-specific information. All the data under metadata is written as a dictionary whose entries are children of metadata, so proper indentation is required.

- spec: the desired state of the object, such as the containers in a Pod. As with metadata, the data here is written as a dictionary. A dash "-" marks the beginning of each item in a list.
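Because YAML relies on indentation, one way to get the structure right is to generate it. The hypothetical pod_manifest function below prints a minimal Pod manifest with the four top-level sections, two-space indentation under metadata and spec, and a dash starting the single item of the containers list. It is only a sketch; kubectl run <pod> --image=<image> --dry-run=client -o yaml produces a similar skeleton.

```shell
#!/bin/sh
# pod_manifest NAME IMAGE: prints a minimal Pod manifest on stdout.
pod_manifest() {
    cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $1
  labels:
    app: $1
spec:
  containers:
  - name: $1-container
    image: $2
EOF
}

# pod_manifest myapp-pod nginx > pod-definition.yml
# kubectl create -f pod-definition.yml
```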

Once the file is ready, run the following command to create the resources it describes in Kubernetes. Instead of passing a file with the -f option, you can also tell kubectl create which type of object to create directly (for example kubectl create deployment):

marijan$ kubectl create -f yaml-definition.yml

If you have a running object but not its .yml file, you can export it to a file to make modifications:

marijan$ kubectl get <object> <name> -o yaml > <file>.yml

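You can also generate a manifest (in YAML, or in another format such as JSON) from a command, for example one that creates a Pod, without writing the .yml file yourself. The --dry-run=client option prints the object instead of creating it:

marijan$ kubectl run <pod> --image=<image> --dry-run=client -o yaml > <file>.yml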

To scale an object without deleting its Pods, change the number of replicas in its .yml file, then replace the running object with the updated file:

marijan$ kubectl replace -f <name>.yml

You can also change a specific value directly from the command line, for example to increase the number of replicas. Note that this only updates the running object; the .yml file itself is not modified:

marijan$ kubectl scale --replicas=<number> -f <name>.yml

You can also edit a running object directly; this opens its live configuration in your editor:

marijan$ kubectl edit <object> <name>

If you want to simulate creating a Pod without actually doing it, add the --dry-run=client parameter. kubectl shows the result without performing the operation:

marijan$ kubectl run nginx --image=nginx --dry-run=client

Commands and Arguments

It's possible to configure commands in a pod by defining the command we want to execute and its arguments under the containers section.

For instance, in the example below, we have defined the command printenv with the arguments HOSTNAME and KUBERNETES_PORT, so the Pod prints the values of these two environment variables: its hostname and the address of the Kubernetes API service. Since printenv exits immediately, restartPolicy is set to OnFailure so that the Pod is not restarted over and over.

marijan$ cat cmd_args_definition.yml
apiVersion: v1
kind: Pod
metadata:
  name: cmd-arg-demo
  labels:
    app: cmd-arg-demo
spec:
  containers:
  - name: cmd-arg-demo-container
    image: debian
    command: ["printenv"]
    args: ["HOSTNAME", "KUBERNETES_PORT"]
  restartPolicy: OnFailure

In a Dockerfile, command corresponds to ENTRYPOINT and args to CMD: command overrides the image's ENTRYPOINT, and args overrides its CMD.
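The way command and args override the image's defaults follows four cases: neither set (the image's ENTRYPOINT and CMD are used), only command (both ENTRYPOINT and CMD are ignored), only args (ENTRYPOINT is kept and CMD is replaced), or both (the image defaults are ignored). The shell sketch below models these rules with space-separated strings, which is a simplification: real Pods use lists, so quoting and arguments containing spaces are not represented. The function name is hypothetical.

```shell
#!/bin/sh
# effective_cmd ENTRYPOINT CMD COMMAND ARGS
# Each argument is a space-separated string; an empty string means "not set".
# Prints the command line the container would run.
effective_cmd() {
    entrypoint=$1
    cmd=$2
    if [ -n "$3" ]; then
        # command is set: it replaces ENTRYPOINT and the image CMD is ignored,
        # so only the Pod's args (possibly empty) follow it.
        entrypoint=$3
        cmd=$4
    elif [ -n "$4" ]; then
        # Only args is set: ENTRYPOINT is kept and CMD is replaced.
        cmd=$4
    fi
    out=$entrypoint
    if [ -n "$cmd" ]; then
        out="${out:+$out }$cmd"
    fi
    echo "$out"
}

# The Pod above, assuming an image with no ENTRYPOINT and a CMD of bash:
effective_cmd '' 'bash' 'printenv' 'HOSTNAME KUBERNETES_PORT'   # prints printenv HOSTNAME KUBERNETES_PORT
```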

Environment Variables

Environment variables store values under a given name. In Kubernetes, there are three main ways to define them: plain key-value pairs, ConfigMaps and Secrets.

Plain Key Value

Plain Key-Value is the basic configuration for setting up an environment variable. You simply define the name of the variable, followed by its value. Here is an example below :

env:
- name: FULLNAME
  value: Marijan Stajic

Each environment variable is a list item, so every item under env starts with a dash "-".

ConfigMap

If many Pods use the same variables, or you simply have a lot of variables and want to keep your Pod definitions short, you can create a ConfigMap.

ConfigMaps store data as key-value pairs in a separate object. Using them involves two steps: creating the ConfigMap and injecting it into a Pod.

As with any Kubernetes object, there are two ways to create a ConfigMap. To create it imperatively, specify the key and the value on the command line:

marijan$ kubectl create configmap <config-name> --from-literal=<key>=<value>

However, this method could be complicated once you have multiple variables to add.

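You can also create it from a file. With --from-file, the whole file is stored under a single key named after the file; to turn each KEY=VALUE line of a properties file into its own key, use --from-env-file instead:

marijan$ kubectl create configmap <config-name> --from-file=<path-to-file>
marijan$ kubectl create configmap <config-name> --from-env-file=<path-to-file>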

The second way to create a ConfigMap is declarative, with a .yml file. As with any object, specify the apiVersion and kind, and define the name under metadata. Then add a data section with a key-value pair for each variable, and create the object:

marijan$ cat configmap-definition.yml
apiVersion: v1
kind: ConfigMap
metadata:
  name: <name>
data:
  <key1>: <value>
  <key2>: <value>

Once it is created, you can list the ConfigMaps in the current namespace:

marijan$ kubectl get configmaps
NAME     DATA   AGE
<name>   1      20d
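With many variables, the data section shown above can be generated from an existing KEY=VALUE file instead of being typed by hand. The hypothetical configmap_from_env function below prints a ConfigMap manifest, skipping blank lines, # comments and lines without =, and splitting each remaining line at its first =. Values are wrapped in double quotes because ConfigMap data values must be strings, and an unquoted value such as 8080 or true would be read by YAML as a number or a boolean. This is roughly what kubectl create configmap --from-env-file does, not a reimplementation of it; the file name app.env is an assumption.

```shell
#!/bin/sh
# configmap_from_env NAME FILE
# Prints a ConfigMap manifest whose data section holds one key per KEY=VALUE
# line of FILE.
configmap_from_env() {
    printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n' "$1"
    while IFS= read -r line || [ -n "$line" ]; do
        case $line in
            ''|'#'*) continue ;;
            *=*) ;;
            *) continue ;;
        esac
        key=${line%%=*}
        value=${line#*=}
        # Escape backslashes and double quotes, then quote the value so YAML
        # keeps it as a string.
        value=$(printf '%s' "$value" | sed 's/\\/\\\\/g; s/"/\\"/g')
        printf '  %s: "%s"\n' "$key" "$value"
    done < "$2"
}

# configmap_from_env app-config app.env > configmap-definition.yml
```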

Now, to inject all the keys of the ConfigMap as environment variables into your Pod, add the following property to the container definition, under the containers section:

envFrom:
- configMapRef:
    name: <name>

The name specified is related to the name under the metadata of your ConfigMap file. To connect the ConfigMap to your Pod, you must set the name accordingly.

There are different ways to inject ConfigMap into Pods, for instance, by using single environment variables or volumes.

Single environment variables are used to add only specific values from the ConfigMap. The structure changes slightly:

env:
- name: <variable/key>
  valueFrom:
    configMapKeyRef:
      name: <name>
      key: <variable/key>

Here, envFrom is replaced by env, each variable gets a valueFrom, and configMapRef becomes configMapKeyRef. Under configMapKeyRef, you specify the name of the ConfigMap and the key you want to read.

Secret

The secret environment variable is used to store sensitive values such as credentials.

When setting up a web server connected to a database, for example, where you need to specify login credentials, storing them in plain text is not secure because the information is exposed.

Instead of writing credentials in a plain key-value pair or a ConfigMap, it's advisable to use a Secret. Keep in mind that, by default, Secret values are only base64-encoded, not encrypted, and are stored unencrypted in etcd unless encryption at rest is enabled, so access to Secrets should be restricted.

The process of setting up a Secret is similar to that of a ConfigMap. The creation steps are the same, except for the encoding part.

In a Secret's .yml file, the values under data must be encoded in base64. To encode a value, execute the following command:

marijan$ echo -n "<value>" | base64
<base64-encoded-value>

The command outputs the encoded value. Then, you just need to add it to your .yml file.

In your .yml file, don't forget to specify the apiVersion, kind, and name of your Secret:

apiVersion: v1
kind: Secret
metadata:
  name: <name>
data:
  <key>: <base64-encoded-value>

To create the Secret imperatively, specify that you are creating a generic Secret. In this case, pass the plain value: kubectl encodes it in base64 for you, so encoding it beforehand would encode it twice:

marijan$ kubectl create secret generic <secret-name> --from-literal=<key>=<value>

Replace <secret-name>, <key> and <value> with the name of your Secret and the sensitive information you want to store.

It's possible to decode the data. To do so, run the following command:

marijan$ echo -n "<base64-encoded-value>" | base64 --decode
<base64-decoded-value>
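The two commands above can be wrapped in small helpers. The sketch below uses printf '%s' instead of echo -n, because echo -n is not portable across shells and an accidental trailing newline would silently become part of the encoded Secret. The function names are hypothetical. Note that GNU base64 wraps its output after 76 characters; for long values, GNU's base64 -w 0 disables the wrapping.

```shell
#!/bin/sh
# encode_secret VALUE: base64 text suitable for the data section of a Secret.
# printf '%s' avoids encoding a trailing newline.
encode_secret() {
    printf '%s' "$1" | base64
}

# decode_secret BASE64: prints the original value.
decode_secret() {
    printf '%s' "$1" | base64 -d
}

encode_secret mysql        # prints bXlzcWw=
decode_secret bXlzcWw=     # prints mysql
```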

Now, to inject it into Pods, the process is quite similar to using a ConfigMap. Add the following information to the container definition, under the containers section of your Pod configuration:

envFrom:
- secretRef:
    name: <name>

As with ConfigMaps, the name specified here corresponds to the name under the metadata of your Secret file. This connection is essential to link the Secret to your Pod correctly.

You can configure a Pod to use specific values from a Secret, or mount the entire Secret as a volume. To use a specific value, adjust the configuration as follows:

env:
- name: <variable/key>
  valueFrom:
    secretKeyRef:
      name: <name>
      key: <variable/key>

Here, envFrom is replaced by env, each variable gets a valueFrom, and secretRef becomes secretKeyRef. Under secretKeyRef, specify the name of the Secret and the key you want to access.

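For volumes, add a volumes section and set secretName to the name of your Secret. Once the volume is mounted in the container (with volumeMounts), each key of the Secret appears as a file in the mount directory:

volumes:
- name: <volume>
  secret:
    secretName: <name>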

Objects (Resources)

This section is about the Objects in Kubernetes used to deploy, manage, and scale applications in the cluster.

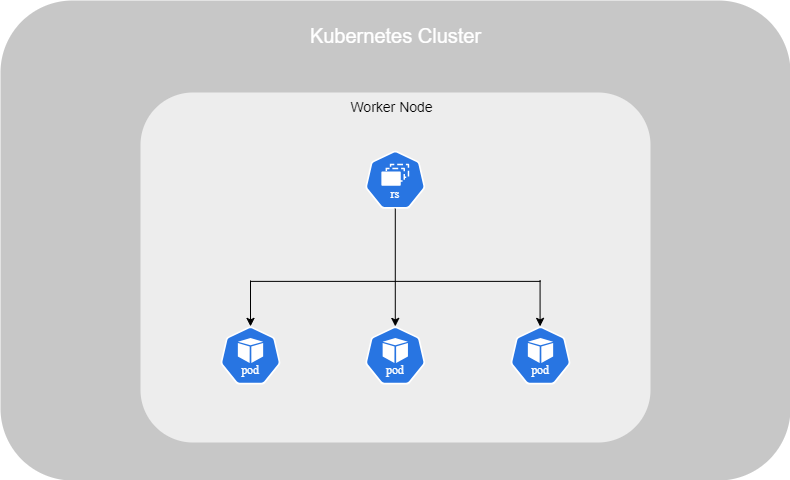

Replications

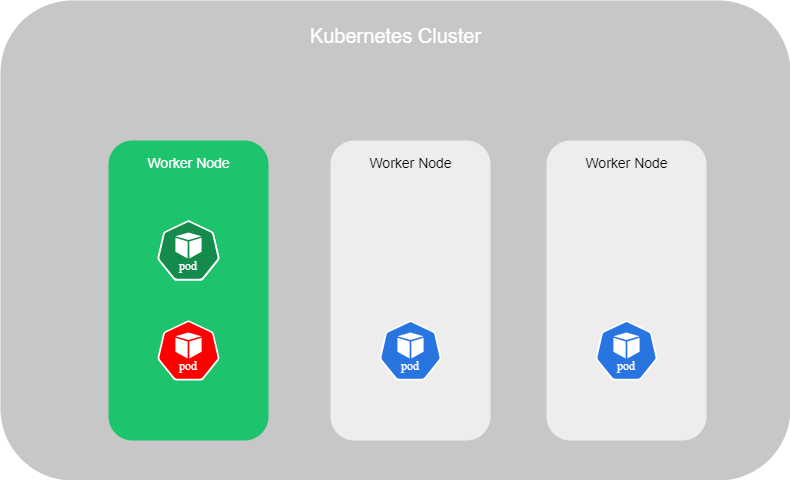

A ReplicaSet ensures high availability of Pods in the cluster by keeping a specified number of identical Pods running. It replaces failed Pods and lets you scale the number of Pods when the number of users increases (spreading traffic across these Pods is the job of a Service).

A ReplicaSet is useful even with a single Pod: if you have one Node with one Pod and that Pod fails, it is automatically replaced.

As mentioned earlier, if the number of users increases, you can raise the number of replicas, and the ReplicaSet creates identical Pods, which can run on other Nodes.

This setup is not only useful for scaling but also ensures high availability by replacing failed Pods.

The ReplicaSet has a predecessor called the ReplicationController. The ReplicationController is older and has been replaced by the ReplicaSet, which offers more flexible selectors.

To set up a ReplicaSet, create a .yml file like the following (object names must be lowercase):

marijan$ cat replicaset-definition.yml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp-replicaset
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: front-end
    spec:
      containers:
      - name: nginx-container
        image: nginx
  replicas: 1
  selector:
    matchLabels:
      type: front-end

As in the YAML section, the four main sections are present: apiVersion, kind, metadata, and spec. Here, the apiVersion is apps/v1 and the kind is ReplicaSet, since we deploy a ReplicaSet instead of a Pod.

We will add a template section, where we will provide the content of our Pod, including its name, labels, and containers in the spec section. Additionally, we will add replicas to specify the number of replicas we want to have.

Furthermore, we will add a selector. This is a major difference between a ReplicationController and a ReplicaSet: in a ReplicaSet (apps/v1) the selector is required and supports both equality-based matchLabels and set-based matchExpressions, whereas the selector of a ReplicationController is optional and only equality-based.

The selector tells the ReplicaSet which Pods it manages, including Pods that already exist, which avoids duplicating an existing Pod. Here is a scenario:

1. You already have a running Nginx pod.

marijan$ kubectl get pods
NAME        READY   STATUS    RESTARTS   AGE
nginx-pod   1/1     Running   0          2m43s

2. You then create a ReplicationController without a selector, with the exact same Pod configuration as the existing, running Pod. When you start it, the ReplicationController creates its own Pod without taking the existing one into account, so two Pods end up running. Moreover, the original nginx-pod is not managed by the ReplicationController, so if it crashes it is not recreated:

marijan$ cat replicationcontroller-definition.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: myapp-replicationcontroller
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: nginx-pod
      labels:
        app: nginx
        type: front-end
    spec:
      containers:
      - name: nginx-container
        image: nginx
  replicas: 1

marijan$ kubectl create -f replicationcontroller-definition.yml
replicationcontroller/myapp-replicationcontroller created

marijan$ kubectl get pods
NAME                                READY   STATUS    RESTARTS   AGE
nginx-pod                           1/1     Running   0          2m43s
myapp-replicationcontroller-4j8wk   1/1     Running   0          2m43s

3. With the selector included in a ReplicaSet, when you create it, the ReplicaSet first checks whether Pods with matching labels already exist (here, provided the existing nginx-pod carries the label type: front-end). It creates only the missing Pods; if enough matching Pods are already running, it takes them over and creates none:

marijan$ cat replicaset-definition.yml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp-replicaset
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: front-end
    spec:
      containers:
      - name: nginx-container
        image: nginx
  replicas: 1
  selector:
    matchLabels:
      type: front-end

marijan$ kubectl create -f replicaset-definition.yml
replicaset.apps/myapp-replicaset created

marijan$ kubectl get pods
NAME        READY   STATUS    RESTARTS   AGE
nginx-pod   1/1     Running   0          2m43s
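The selector logic of this scenario can be sketched in a few lines of shell. matches_selector applies the matchLabels rule (every key=value of the selector must appear among the Pod's labels, and extra labels on the Pod are allowed), and pods_to_create counts the matching Pods and prints how many the ReplicaSet still has to create. Labels are modelled as space-separated key=value strings, and the function names are hypothetical; the real controller works on Pod objects through the API server.

```shell
#!/bin/sh
# matches_selector SELECTOR LABELS
# Both arguments are space-separated key=value lists. Succeeds when every
# key=value of SELECTOR is also among LABELS.
matches_selector() {
    for pair in $1; do
        case " $2 " in
            *" $pair "*) ;;
            *) return 1 ;;
        esac
    done
    return 0
}

# pods_to_create REPLICAS SELECTOR
# Reads one Pod's labels per line on stdin and prints REPLICAS minus the
# number of matching Pods: a positive value is the number of Pods to create,
# a negative value the number of surplus Pods.
pods_to_create() {
    matching=0
    while IFS= read -r labels; do
        if matches_selector "$2" "$labels"; then
            matching=$(( matching + 1 ))
        fi
    done
    echo $(( $1 - matching ))
}

# Scenario above, assuming nginx-pod has the labels app=nginx and type=front-end:
printf '%s\n' 'app=nginx type=front-end' | pods_to_create 1 'type=front-end'   # prints 0
```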

Deployments

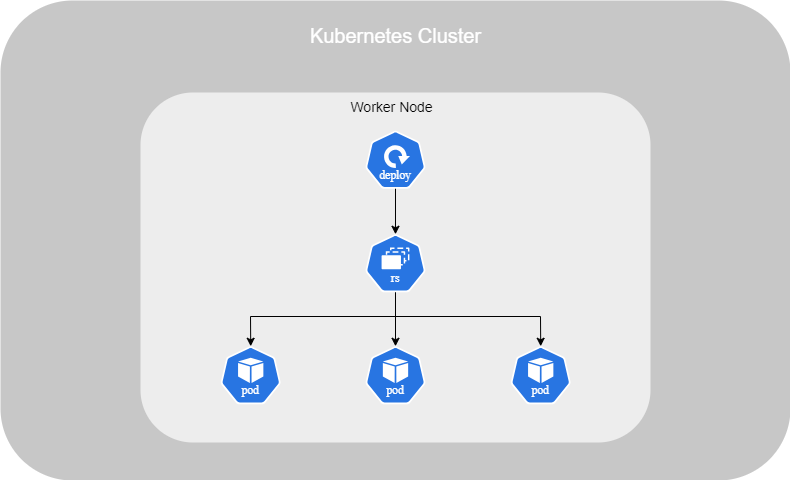

The advantage of setting up a Deployment file on Kubernetes is that it automates various processes such as deployment, upgrading instances, undoing recent changes, and making multiple changes to the application.

To fully benefit from a Deployment, you should have several Pods running: when you update the application, the Deployment replaces the Pods progressively (a rolling update) rather than all at once, so the service is not interrupted.

In fact, a Deployment creates and manages a ReplicaSet, which keeps the Pods running and replaces any that crash. The Deployment sits above the ReplicaSet to automate the processes listed before.

Just like with a ReplicaSet, you need to set up a .yml file for a Deployment. The sections are exactly the same as those in a ReplicaSet, except for the kind:

marijan$ cat deployment-definition.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: front-end
    spec:
      containers:
      - name: nginx-container
        image: nginx
  replicas: 3
  selector:
    matchLabels:
      type: front-end

To create a Deployment or any other object such as a ReplicaSet or Pod, you don't necessarily need to set up a .yml file. In fact, you can do it by running a single command.

marijan$ kubectl create deployment myapp-deployment --image=busybox --replicas=3

Here, I have created a Deployment named myapp-deployment using the image busybox, with 3 replicas. To list the main objects (Pods, Services, Deployments and ReplicaSets) at the same time, execute the following command:

marijan$ kubectl get all
NAME                                    READY   STATUS    RESTARTS   AGE
pod/myapp-deployment-7969484b57-5c2cr   0/1     Pending   0          13s
pod/myapp-deployment-7969484b57-fjlh6   0/1     Pending   0          13s
pod/myapp-deployment-7969484b57-tfj4n   0/1     Pending   0          13s

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   5d1h

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/myapp-deployment   0/3     3            0           13s

NAME                                          DESIRED   CURRENT   READY   AGE
replicaset.apps/myapp-deployment-7969484b57   3         3         0       14s
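The gradual replacement of Pods described above follows from the Deployment's rolling-update settings, maxSurge and maxUnavailable, which both default to 25%; the surge is rounded up and the unavailable count rounded down. The sketch below computes, for a given number of replicas, how many Pods may exist at most during an update and how many must stay available. It only handles percentages (not absolute values), and the function name is hypothetical. With 3 replicas it gives 4 and 3: one extra Pod is created at a time, and an old Pod is removed only once its replacement is available.

```shell
#!/bin/sh
# rollout_bounds REPLICAS [MAX_SURGE_PCT] [MAX_UNAVAILABLE_PCT]
# Prints how many Pods may exist at most during a rolling update and how many
# must stay available. Percentages default to 25, as in a Deployment.
rollout_bounds() {
    replicas=$1
    surge_pct=${2:-25}
    unavail_pct=${3:-25}
    surge=$(( (replicas * surge_pct + 99) / 100 ))   # maxSurge is rounded up
    unavail=$(( replicas * unavail_pct / 100 ))      # maxUnavailable is rounded down
    echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}

rollout_bounds 3    # prints max_pods=4 min_available=3
```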

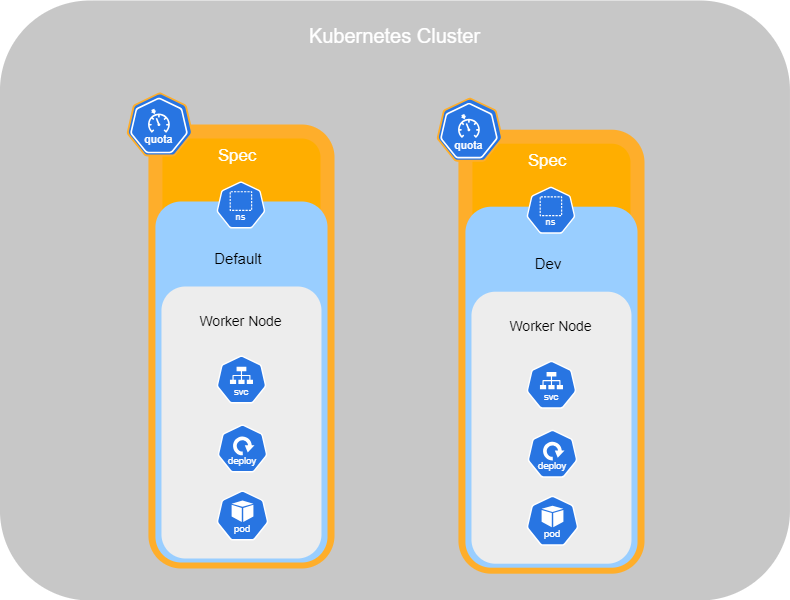

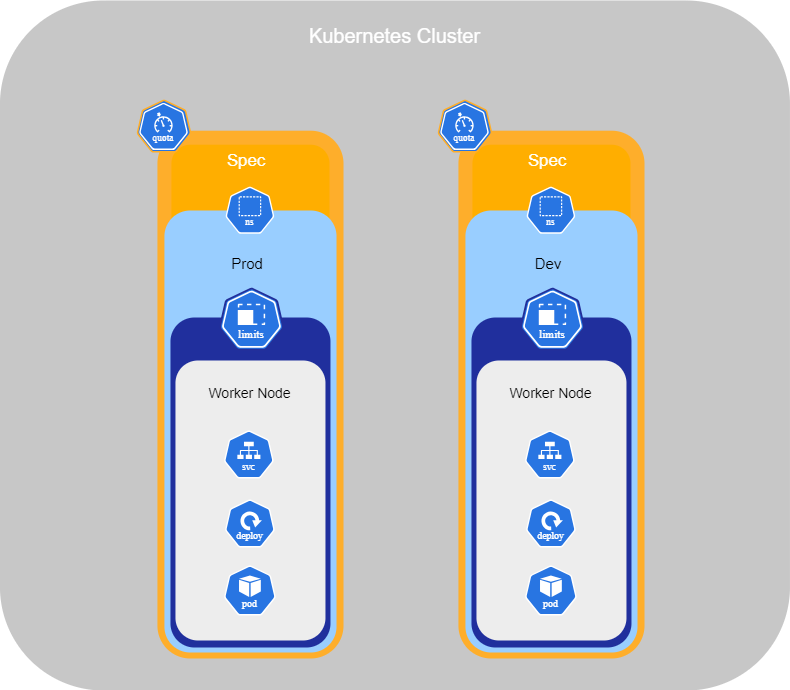

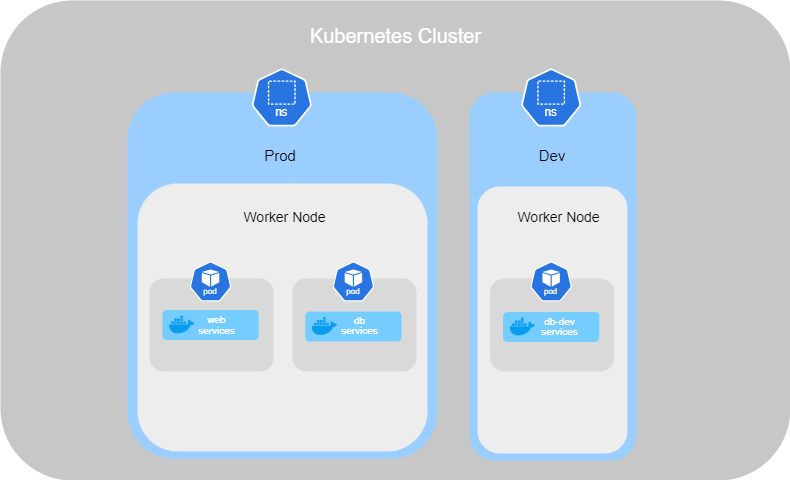

Namespaces

Namespaces in Kubernetes are like houses that objects such as Pods and services live in. By default, when you create objects in your cluster, they are all placed in the namespace named default. This is sufficient when using Kubernetes for testing or when working alone.

Kubernetes also creates the namespaces kube-system and kube-public by default. kube-system is a required namespace used by services such as networking, DNS, and more. kube-public is a namespace used to make resources available to all users, similar to a public folder.

However, when you have a large enterprise with different departments needing containers, you can create new namespaces in order to separate resources. Separating resources helps prevent accidental deletion or modification of services.

Each namespace can have its own policies to define what actions users can perform. You can also assign resource quotas for each of them. For example, you can create a namespace called Dev for your developers and Prod for the production containers. Then, we can restrict access for certain individuals to the production environment and allocate limited resources for the Dev environment

As with any object in Kubernetes, you can set up a namespace through a .yml filen:

marijan$ cat namespace-definition.yml
apiVersion: v1
kind: Namespace
metadata:
  name: dev

When you create a Pod without specifying a namespace, it is placed in the default namespace. You can, however, change the namespace used by your current kubectl context, for example to dev, by executing:

marijan$ kubectl config set-context $(kubectl config current-context) --namespace=dev

To specify in which namespace a Pod should run, you can add the name of the namespace under the metadata section of the .yml file:

marijan$ cat pod-definition.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  namespace: dev
  labels:
    app: myapp
    type: front-end
spec:
  containers:
    - name: nginx-container
      image: nginx

Alternatively, you can specify the namespace when creating the Pod:

marijan$ kubectl create -f pod-definition.yml --namespace=dev

Finally, to list the Pods running in another namespace, run:

marijan$ kubectl get pods --namespace=dev

Resource Quotas

To limit the resources available in a namespace, you have to set up a ResourceQuota.

It can limit the number of Pods, the total CPU and memory requested or consumed, and more. Here is an example of a .yml file for a ResourceQuota:

marijan$ cat resourcequota-definition.yml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: resource-quota
  namespace: dev
spec:
  hard:
    pods: "10"
    requests.cpu: "4"
    requests.memory: 5Gi
    limits.cpu: "10"
    limits.memory: 10Gi
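With a quota on requests.cpu, requests.memory, limits.cpu and limits.memory in place, Kubernetes may reject Pods created in dev that don't declare these values (a LimitRange can supply defaults instead). The sketch below, with a hypothetical Pod name and illustrative values, shows where requests and limits go in a Pod definition:

```yaml
# Hypothetical Pod created in the dev namespace covered by the quota above
apiVersion: v1
kind: Pod
metadata:
  name: quota-demo-pod
  namespace: dev
spec:
  containers:
    - name: nginx-container
      image: nginx
      resources:
        requests:        # counted against requests.cpu / requests.memory
          cpu: 500m
          memory: 256Mi
        limits:          # counted against limits.cpu / limits.memory
          cpu: "1"
          memory: 512Mi
```

With these values, the quota above would admit at most 8 such Pods: requests.cpu is the first limit reached (8 × 500m = 4 CPUs), before the limit of 10 Pods.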

Limit Range

A ResourceQuota limits the total amount of resources used in a namespace. If you also want to set, for each container running in the namespace, a minimum, a maximum, or default values for the resources it uses, you can set up a LimitRange.

With a LimitRange, the defaults apply to all containers created in the namespace, so you don't have to configure each one individually. Here is an example of a .yml file for a LimitRange:

marijan$ cat limitrange-definition.yml
apiVersion: v1
kind: LimitRange
metadata:
  name: limit-range
spec:
  limits:
    - default:
        cpu: 500m
      defaultRequest:
        cpu: 500m
      max:
        cpu: "1"
      min:
        cpu: 100m
      type: Container

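This example only constrains CPU. Memory is constrained the same way, using memory instead of cpu; it can go in the same LimitRange or, to keep things readable, in a separate one.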
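As a sketch, a memory LimitRange for the dev namespace could look like this; the name and values are illustrative assumptions. The values are kept ordered so that min ≤ defaultRequest ≤ default ≤ max, as the API server expects:

```yaml
# Hypothetical memory constraints for containers in the dev namespace
apiVersion: v1
kind: LimitRange
metadata:
  name: memory-limit-range
  namespace: dev
spec:
  limits:
    - default:           # limit applied when a container sets none
        memory: 512Mi
      defaultRequest:    # request applied when a container sets none
        memory: 256Mi
      max:
        memory: 1Gi
      min:
        memory: 128Mi
      type: Container
```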

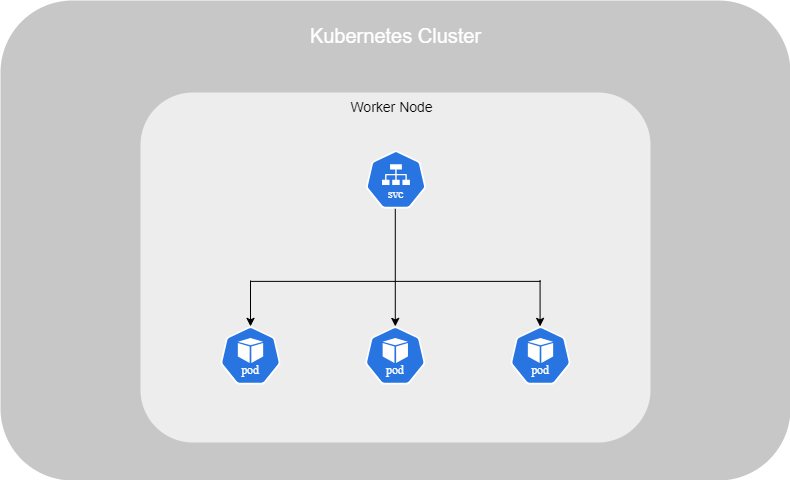

Services

In Kubernetes, a Service is an object. It exposes an application running in a set of Pods on the network, so that clients can interact with it without the application having to be modified to fit the network configuration.

To set up a Service, define it in a .yml file:

marijan$ cat service-definition.yml
apiVersion: v1
kind: Service
metadata:
  name: service-definition
spec:
  selector:
    app.kubernetes.io/name: MyApp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376

Like every Kubernetes object, the .yml file contains four main sections: apiVersion, kind, metadata and spec.

Under the spec section, the selector lists the labels of the Pods targeted by the Service, and ports defines how traffic is forwarded: port is the port exposed by the Service, and targetPort is the port on which the application listens inside the Pods.
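To illustrate how the selector and the ports fit together, here is a sketch of a Pod the Service above would target. The Pod name and the myapp-image image are hypothetical; what matters is that the label matches the selector and that the container listens on the targetPort:

```yaml
# Hypothetical Pod selected by service-definition
apiVersion: v1
kind: Pod
metadata:
  name: myapp-backend
  labels:
    app.kubernetes.io/name: MyApp   # matches the Service selector
spec:
  containers:
    - name: myapp
      image: myapp-image            # hypothetical image serving on 9376
      ports:
        - containerPort: 9376       # the Service's targetPort
```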

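As with other objects, you don't have to write a .yml file to create a Service. You can also expose an existing object, such as a Deployment, with the following command; the Service then reuses that object's selector:

marijan$ kubectl expose deployment myapp-deployment --name=service-definition --port=80 --protocol=TCP --target-port=9376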

Pods

In Kubernetes, containers are not deployed on their own: they are encapsulated in a Pod, the smallest object you can create, and the application runs inside it.

Containers placed in the same Pod also share its lifecycle: if a Pod runs, for example, a web server together with a helper container, deleting the Pod deletes both.

You can set up a Pod through a .yml file:

marijan$ cat pod-definition.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
    type: front-end
spec:
  containers:
    - name: nginx-container
      image: nginx

The four main sections are present: apiVersion, kind, metadata and spec. The first two set the API version to use (v1 for a Pod) and the type of object (Pod).

In the metadata, we include the name of the Pod and labels that describe it. The app label indicates the application this Pod is associated with, while the type label specifies its function.

Under the spec section, we've added the containers list with the name of our container and the image it uses. The dash "-" marks the beginning of an item in a list, here the first and only container.

With kubectl, you can list the Pods of the current namespace, along with information such as their name, status and number of restarts:

marijan$ kubectl get pods
NAME        READY   STATUS    RESTARTS   AGE
myapp-pod   1/1     Running   0          5m58s

To find out on which Node a Pod is running, add the -o wide option:

marijan$ kubectl get pods -o wide
NAME        READY   STATUS              RESTARTS   AGE   IP       NODE        NOMINATED NODE   READINESS GATES
myapp-pod   0/1     ContainerCreating   0          4s    <none>   deb-vm-02   <none>           <none>

To get more information about a specific Pod, run the describe command followed by the type of object (pod) and its name:

marijan$ kubectl describe pod myapp-pod

To delete a running Pod, run the delete command followed by the type of object (pod) and its name:

marijan$ kubectl delete pod myapp-pod

To run a Pod without writing a .yml file, execute the following command, where the first argument is the name of the Pod:

marijan$ kubectl run <pod-name> --image=<image>

Multi-Container

You can run several containers in the same Pod. This is useful when, for example, a web server needs a log agent: because the two must live and die together, you can deploy them in the same Pod, where they share the same lifecycle.

Sharing the lifecycle means that they are created and destroyed together. Additionally, they share the same network namespace, so they can reach each other through localhost, and they can mount the same storage volumes, so you don't need to set up volume sharing or a Service between them to let them communicate.

You can set up a multi-container Pod by adding another name and image entry under the containers section:

marijan$ cat pod-definition.yml
apiVersion: v1
kind: Pod
metadata:
  name: webapp
  labels:
    app: webapp
spec:
  containers:
    - name: webapp
      image: webapp
    - name: log-agent
      image: log-agent

As seen before, kubectl get pods lists the Pods. The READY column shows how many of a Pod's containers are ready, out of the total number of containers in the Pod:

marijan$ kubectl get pods
NAME     READY   STATUS    RESTARTS   AGE
webapp   2/2     Running   0          5m58s

Design Patterns

Three design patterns are commonly used for multi-container Pods:

- Sidecar Pattern: the sidecar is an additional container that assists the primary container with tasks such as logging, monitoring and security.

- Adapter Pattern: as the name suggests, the adapter container adapts data. For example, if you have logs in different formats that must be sent to a central server in a common format, the adapter handles the conversion.

- Ambassador Pattern: the ambassador is an additional container that acts as a proxy. For instance, when the application is deployed in different environments such as Dev, Test or Prod, the ambassador routes requests to the correct database or service for the current environment, so the application itself does not need to know about it.
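As an illustration of the sidecar pattern, the webapp Pod from earlier could share a log directory with its log agent through an emptyDir volume. The images are the placeholder names used above, and the mount path is an assumption about where the hypothetical webapp image writes its logs:

```yaml
# Sketch of a sidecar: both containers mount the same emptyDir volume
apiVersion: v1
kind: Pod
metadata:
  name: webapp
  labels:
    app: webapp
spec:
  volumes:
    - name: logs               # created with the Pod, removed with it
      emptyDir: {}
  containers:
    - name: webapp
      image: webapp
      volumeMounts:
        - name: logs
          mountPath: /var/log/webapp   # hypothetical log directory
    - name: log-agent
      image: log-agent
      volumeMounts:
        - name: logs
          mountPath: /var/log/webapp
          readOnly: true       # the agent only reads the logs
```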

Init Containers

As explained above, the containers of a Pod share the same lifecycle and are expected to keep running for as long as the Pod lives. However, if your application needs to run a script or perform an initialisation task that doesn't need to keep running, you can use an Init Container.

An Init Container runs once, to completion, before the application containers of the Pod start. Once its task is done, it exits without affecting the primary container. It is essentially a one-time container designed for initialisation purposes.

If you have multiple Init Containers, they run sequentially: the second one starts only after the first one has finished successfully. If an Init Container fails, the kubelet restarts it until it succeeds. However, if the Pod's restartPolicy is set to Never, a failing Init Container causes the whole Pod to be marked as failed.

You can add an initContainers section to your Pod .yml file:

marijan$ cat pod-definition.yml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
    type: front-end
spec:
  containers:
    - name: nginx-container
      image: nginx
  initContainers:
    - name: init-myservice
      image: alpine/git   # busybox does not include git
      command: ['sh', '-c', 'git clone <repository-url>']

Design

Labels, Selectors & Annotations

To maintain a well-structured infrastructure and easily find information, you can assign labels to objects. This helps categorise services effectively, allowing you to group them by type, functionality, etc.

To do this, add a labels section under metadata, and write each label as a key: value pair:

marijan$ cat pod-definition.yaml
apiVersion: v1
kind: Pod
metadata:
  name: webserver
  labels:
    app: App1
    function: Service
spec:
  containers:
    - name: webserver
      image: nginx

Once labels are defined, you can use a selector to retrieve the objects that carry a given label:

marijan$ kubectl get pods --selector function=Service
NAME        READY   STATUS    RESTARTS     AGE
webserver   1/1     Running   2 (7d ago)   78d

You can also define labels in resources such as a ReplicaSet. In a ReplicaSet, labels appear in three places:

- under metadata, to label the ReplicaSet itself;

- under selector, in matchLabels, to define which Pods the ReplicaSet manages;

- under the metadata of the template, to label the Pods it creates. These labels must match the selector, otherwise the ReplicaSet is rejected.

marijan$ cat replicaset-definition.yml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp-replicaset
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: front-end
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 1
  selector:
    matchLabels:
      type: front-end

Finally, you have annotations. They record additional information about an object, such as its build version or contact details. Unlike labels, annotations are not used by selectors. Annotation values are strings, so a value such as 1.10 must be quoted:

marijan$ cat replicaset-definition.yml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myapp-replicaset
  labels:
    app: myapp
    type: front-end
  annotations:
    buildversion: "1.10"
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: front-end
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 1
  selector:
    matchLabels:
      type: front-end

Rollout and Versioning

When a Deployment is created, a Rollout is automatically triggered, and this Rollout creates a new Revision. Later, every time the application is updated, a new Rollout creates a new Revision again.

This process helps keep track of changes and allows us to roll back to a previous version if needed.

To follow the progress of a Rollout, run the following command, followed by the Deployment name:

marijan$ kubectl rollout status deployment/<deployment>
Waiting for deployment "myapp-wiki-deployment" rollout to finish: 1 out of 3 new replicas have been updated...

To check the Rollout history of a Deployment, run:

marijan$ kubectl rollout history deployment <deployment>
deployment.apps/myapp-wiki-deployment
REVISION  CHANGE-CAUSE
1         <none>
2         <none>
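The CHANGE-CAUSE column is filled from the kubernetes.io/change-cause annotation of the Deployment, which is recorded on each Revision. As a sketch, you could set it in the same change as the Pod template update; the message and the nginx:1.27 tag below are only examples:

```yaml
# Fragment of deployment-definition.yml (other fields unchanged)
metadata:
  name: myapp-deployment
  annotations:
    # Free text shown in the CHANGE-CAUSE column of the new Revision
    kubernetes.io/change-cause: "Update image to nginx:1.27"
spec:
  template:
    spec:
      containers:
        - name: nginx-container
          image: nginx:1.27   # the template change that triggers the Rollout
```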

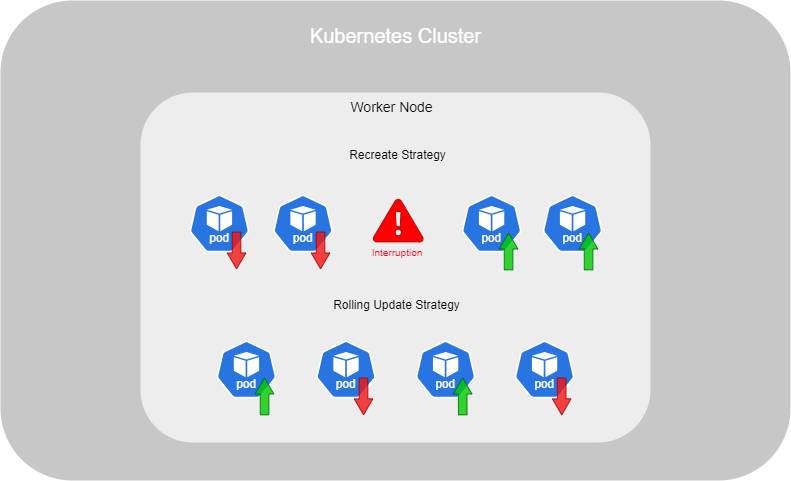

There are two available strategies. The first, called Recreate, destroys all existing Pods of the Deployment and then creates new ones. This strategy is not recommended in most cases, because the application is unavailable in between.

The recommended and default strategy is called Rolling Update. It gradually replaces old Pods with new ones, a few at a time (by default, at most 25% of the desired Pods may be unavailable and at most 25% extra Pods may be created during the update), so that the service remains available throughout the process.
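The pace of a Rolling Update can be tuned under the strategy section of the Deployment spec, through maxSurge and maxUnavailable. A sketch with illustrative values:

```yaml
# Fragment of a Deployment spec with explicit Rolling Update settings
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most 1 Pod above the 3 replicas during the update
      maxUnavailable: 0   # never fewer than 3 available Pods
```

With maxSurge: 1 and maxUnavailable: 0, Kubernetes creates one new Pod, waits until it is available, removes one old Pod, and repeats. The update then really proceeds one Pod at a time, at the cost of needing room for one extra Pod.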

A new Revision is created when the Pod template of a Deployment changes, for example its labels, annotations or the image version being used. To apply changes made in the file, run the following command, followed by the file name:

marijan$ kubectl apply -f <file.yml>

It is also possible to update the application directly through a command, for example to change the image, but this approach is not recommended: the .yml file is not updated, so if the resource is later destroyed and recreated from it, it reverts to the previous version.

marijan$ kubectl set image deployment <deployment> <container>=<image>:<version>

Either way, the change triggers a new Rollout. If an issue arises after the Rollout, you can undo it by executing the following command:

marijan$ kubectl rollout undo deployment myapp-wiki-deployment
deployment.apps/myapp-wiki-deployment rolled back

To set a different strategy, add a strategy section under the spec of the Deployment (not under the template):

marijan$ cat deployment-definition.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
    type: front-end
spec:
  strategy:
    type: Recreate
  template:
    metadata:
      name: myapp-pod
      labels:
        app: myapp
        type: front-end
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 3
  selector:
    matchLabels:
      type: front-end

Deployment Strategy

Besides Rolling Update and Recreate, other deployment strategies can be built by combining Deployments and Services.

Blue / Green

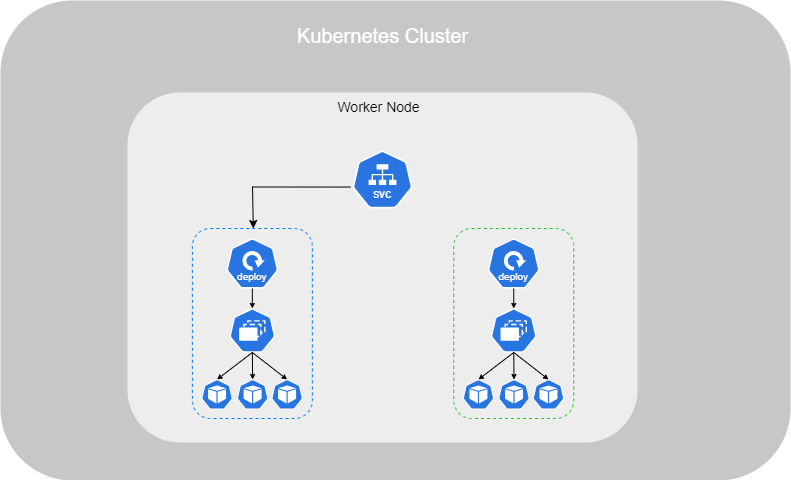

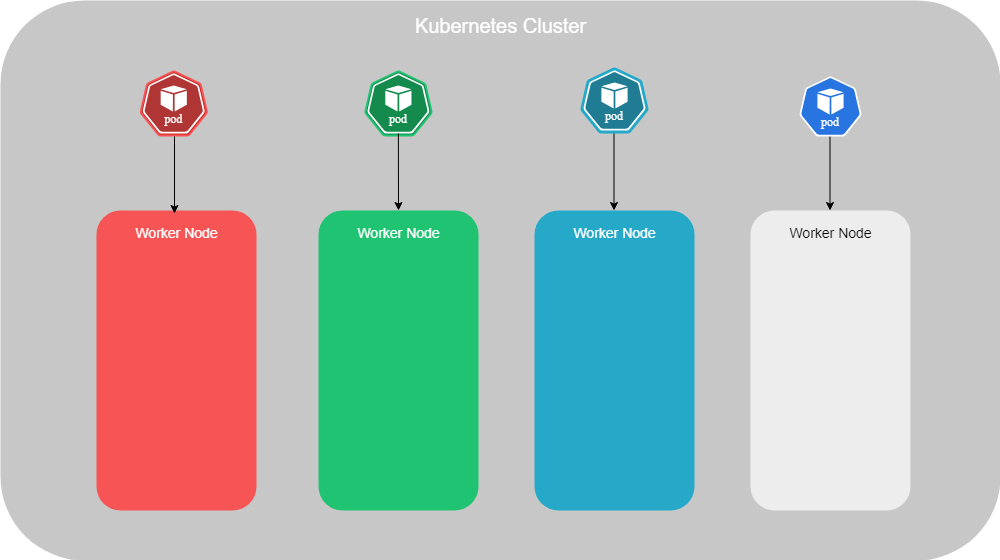

The Blue/Green Deployment Strategy involves maintaining two environments. The first environment, known as blue, runs the current version of the application, while the second environment, green, hosts the new version.

After testing is complete and the new version is ready for deployment, traffic can be switched to the green environment.

This process relies on the Service's label selector. For example, the Pods of the blue deployment could be labeled version: v1 and those of the green deployment version: v2. Once all checks are successful and the green environment is ready, you simply update the Service's selector to point to the green deployment.

Here is the Service configuration file:

marijan$ cat service-definition.yml
apiVersion: v1
kind: Service
metadata:
  name: service-definition
spec:
  selector:
    version: v1
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80

Then, here is the configuration file for the blue deployment:

marijan$ cat deployment-definition.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        version: v1
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 3
  selector:
    matchLabels:
      version: v1

The benefit of this configuration is that you can deploy the new version next to the current one, perform all necessary tests, and, once they are completed, simply change the selector of the Service. All traffic is then routed to the new version at once.
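As a sketch of the switch itself, the green deployment below runs next to the blue one with the label version: v2, and the Service is then updated to select it. The deployment name and the nginx:1.27 tag standing for the "new version" are assumptions:

```yaml
# Green deployment: same application, new version, labeled version: v2
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment-green
spec:
  replicas: 3
  selector:
    matchLabels:
      version: v2
  template:
    metadata:
      labels:
        version: v2
    spec:
      containers:
        - name: nginx-container
          image: nginx:1.27   # hypothetical new version
---
# Once the green Pods are tested, only the selector changes
apiVersion: v1
kind: Service
metadata:
  name: service-definition
spec:
  selector:
    version: v2
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

Switching back to blue is the same one-line change in the other direction, which makes rollback immediate.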

Canary

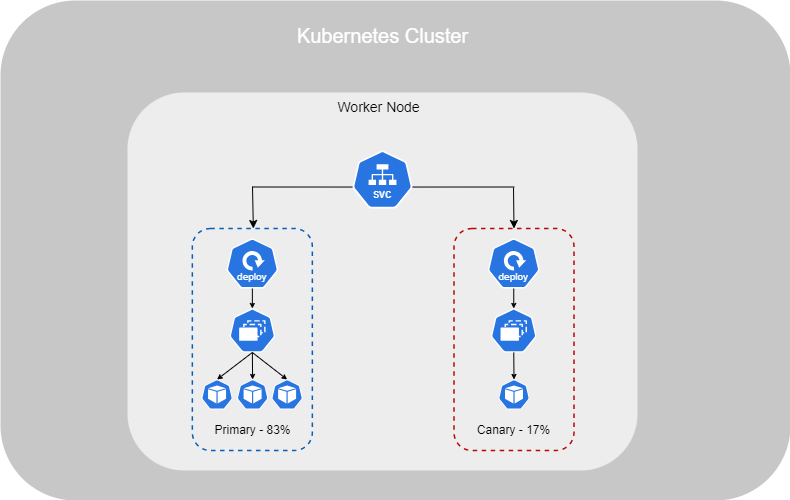

The Canary Deployment Strategy involves deploying only one Pod with the new version, while routing a small portion of traffic to it, with the rest remaining on the previous version. Once all tests have been completed and the new version is ready for deployment, all Pods are upgraded using a Rolling Update (or Recreate, if configured that way).

To perform a Canary Deployment, you need two separate deployments. The first is the primary deployment, where all Pods are running the older version. The canary deployment contains only one Pod running the new version. Similar to the Blue/Green strategy, a service defines which deployment should be used.

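To spread the traffic of one Service across both deployments, their Pods need a common label, used as the Service selector. For instance, let's use app: front-end. Because the Service balances requests across all matching Pods, traffic is split roughly in proportion to the number of Pods: with 3 primary Pods and 1 canary Pod, about a quarter of the requests reach the new version.

Here is an example configuration for the Service:

marijan$ cat service-definition.yml
apiVersion: v1
kind: Service
metadata:
  name: service-definition
spec:
  selector:
    app: front-end
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80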

Here is the configuration file for the primary deployment. Its selector also includes the version label, so that it only manages its own Pods and does not overlap with the canary deployment:

marijan$ cat deployment-primary.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        version: v1
        app: front-end
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 3
  selector:
    matchLabels:
      app: front-end
      version: v1

Then, here is the configuration file for the canary deployment. It needs its own name, otherwise it would replace or conflict with the primary deployment:

marijan$ cat deployment-canary.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-canary-deployment
  labels:
    app: myapp
    type: front-end
spec:
  template:
    metadata:
      name: myapp-pod
      labels:
        version: v2
        app: front-end
    spec:
      containers:
        - name: nginx-container
          image: nginx
  replicas: 1
  selector:
    matchLabels:
      app: front-end
      version: v2

Jobs

There are different types of workloads: some run for long periods, such as web servers or applications, while others run only for a short time to complete a task, such as sending an email or generating a report. The latter are handled by Jobs.

You can create a Pod to perform a quick task and set its restartPolicy to Never, so that Kubernetes does not restart the container, and run the same task again, once it has completed.

For a single task, this approach may work. However, if a task has to be completed several times, possibly in parallel, it is recommended to use a Job. Here is an example of configuration:

marijan$ cat job-definition.yml
apiVersion: batch/v1
kind: Job
metadata:
  name: mytask-job
spec:
  parallelism: 3
  completions: 3
  template:
    spec:
      containers:
        - name: mytask-job
          image: ubuntu
          command: ['expr', '3', '+', '2']
      restartPolicy: Never

In this example, we have set the apiVersion to batch/v1 and the kind to Job. Under the spec section, completions (3) is the number of Pods that must finish successfully, parallelism (3) is how many of them may run at the same time, and the template specifies the container and the command to execute.

The template of a Job is a Pod template, so you provide the same information as you would for a Pod (containers, command, restartPolicy, etc.). The only difference is that the restartPolicy of a Job must be Never or OnFailure.
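As a sketch, the variant below (the name mytask-job-retry is hypothetical) restarts a failed container in place with OnFailure and uses backoffLimit to cap the number of retries before the whole Job is marked as failed:

```yaml
# Variant of mytask-job with bounded retries
apiVersion: batch/v1
kind: Job
metadata:
  name: mytask-job-retry
spec:
  completions: 3
  parallelism: 3
  backoffLimit: 4        # retries allowed before the Job fails (default: 6)
  template:
    spec:
      containers:
        - name: mytask-job
          image: ubuntu
          command: ['expr', '3', '+', '2']
      restartPolicy: OnFailure   # restart the container in the same Pod
```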

To check the outcome of the process run by the Job, you can view the logs of its Pods (for a Job with several Pods, this command shows the logs of one of them):

marijan$ kubectl logs job/<job>

Cron Jobs

A CronJob creates Jobs periodically, according to a schedule, for example once per week, day or month. Its configuration is similar to that of a Job, but you must set the kind to CronJob, define the schedule, and place the Job specification under jobTemplate. Here is an example:

marijan$ cat cron-job-definition.yml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: reporting-cron-job
spec:
  schedule: "* * * * *"
  jobTemplate:
    spec:
      completions: 3
      parallelism: 3
      template:
        spec:
          containers:
            - name: reporting-tool
              image: reporting-tool
          restartPolicy: Never

The representation below indicates what each part of the cron schedule represents:

┌───────────── minute (0 - 59)
│ ┌───────────── hour (0 - 23)
│ │ ┌───────────── day of the month (1 - 31)
│ │ │ ┌───────────── month (1 - 12)
│ │ │ │ ┌───────────── day of the week (0 - 6) (Sunday to Saturday)
│ │ │ │ │                                   OR sun, mon, tue, wed, thu, fri, sat
│ │ │ │ │
│ │ │ │ │
* * * * *
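The "* * * * *" schedule used in reporting-cron-job runs the Job every minute. Reading the fields from left to right, a weekly report every Monday at 02:00 could be scheduled as follows (an illustrative fragment of the CronJob spec):

```yaml
# Fragment of cron-job-definition.yml with a weekly schedule
spec:
  # minute 0, hour 2, any day of the month, any month, day of the week 1 (Monday)
  schedule: "0 2 * * 1"
```

Unless spec.timeZone is set (the TIMEZONE column of kubectl get cronjobs), the schedule is interpreted in the time zone of the kube-controller-manager.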

Once it is created, you can list the CronJob to see its information:

marijan$ kubectl get cronjobs
NAME                 SCHEDULE    TIMEZONE   SUSPEND   ACTIVE   LAST SCHEDULE   AGE
reporting-cron-job   * * * * *   <none>     False     0        <none>          7s

Service & Network

Services in Kubernetes set up connectivity between components within the cluster, such as between back-end and front-end Pods, or with external resources. Like a Deployment or a ReplicaSet, a Service is a Kubernetes object.

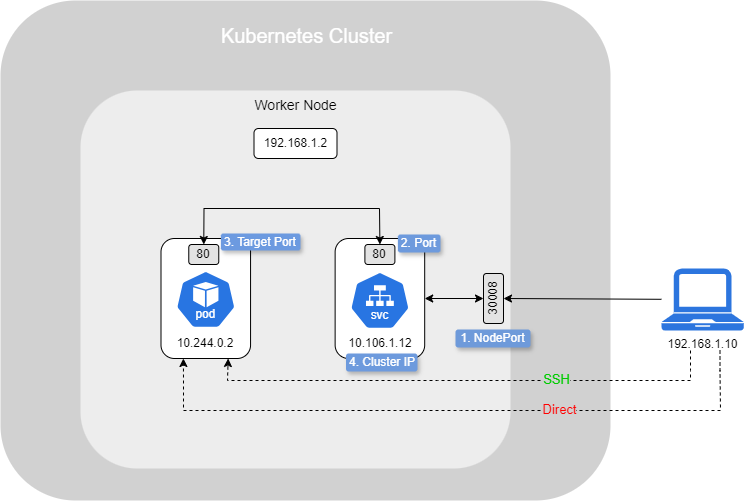

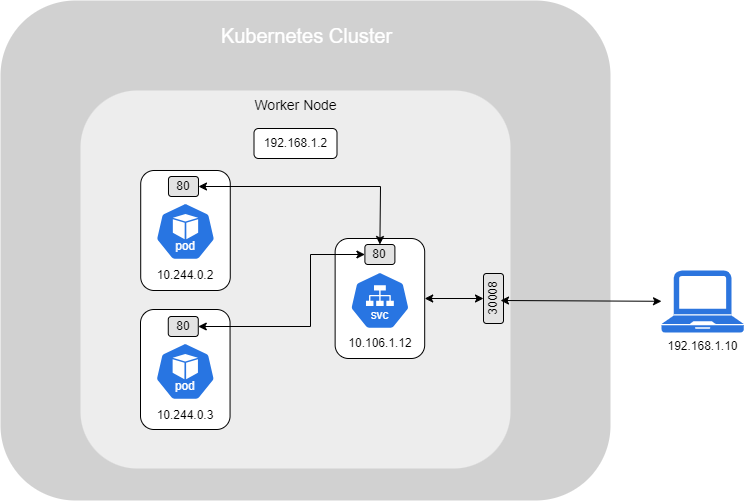

Below is a simple scenario where an external user tries to access a Pod:

By default, the user cannot reach the Pod directly through its IP address (10.244.0.2), even from the same network as the Node (192.168.1.0), because the Pod runs inside the Node, on a separate Pod network. The user could, however, connect to the Node through SSH, since the Node has access to the Pod network (10.244.0.0), and reach the Pod from there.

This is not what we really want. Instead of using SSH, we set up a Service of type NodePort, which involves the following elements:

- NodePort: to allow connections from outside, the NodePort is a port opened on the Node that forwards traffic to the Service. By default, it is assigned within the range 30000-32767. In this example, it is set to 30008.

- Port: the request is then forwarded to the port of the Service itself, which receives the traffic coming from NodePort 30008.

- TargetPort: finally, the request is forwarded to the Pod, on the port where the actual application is running (in this case, port 80).

- Cluster IP: this is the IP address assigned to the Service inside the cluster, here 10.106.1.12.

All of this information is from the perspective of the Service. Here is an example configuration related to the scenario above:

marijan$ cat service-definition.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30008
  selector:
    app: myapp

If you don't specify the targetPort, it defaults to the same value as port. Likewise, if you don't provide a nodePort, Kubernetes automatically assigns a free port within the default range (30000-32767).

To ensure that traffic reaches the correct service on the TargetPort, it's crucial to set the selector correctly. For example, in this configuration, the selector is set to app: myapp, which means it will forward traffic to port 80 of any Pod labeled with app: myapp.

Once the Service is created, you can display it by running:

marijan$ kubectl get services
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        130d
my-service   NodePort    10.97.123.89   <none>        80:30008/TCP   89s

Finally, if several Pods on a single Node provide the same application and carry the label targeted by the selector, the Service acts as a load balancer and distributes traffic between them. The same applies when these Pods are spread across several Nodes: the NodePort is then opened on every Node, so the application can be reached through the IP of any Node on port 30008, and the Service routes each request to one of the matching Pods.

Cluster IP

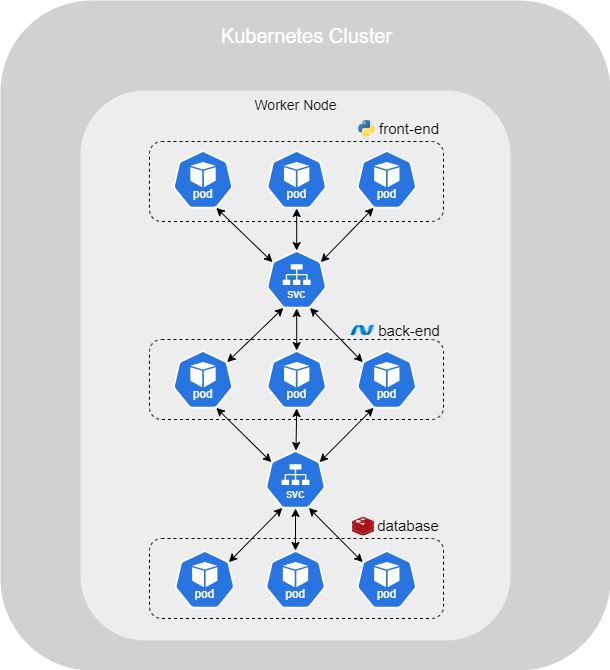

A full stack web application consists of different types of Pods hosting various parts of the application. These include the front-end, back-end, and a database, which is a separate entity connected to the back-end. You may have multiple Pods running, and they need to communicate with each other.

Even though Pods have IP addresses, these addresses are not static. Each time a Pod is destroyed and recreated, a new IP address is assigned. Therefore, you cannot rely on a static IP address for your front-end Pod to communicate with the back-end.

The solution is to use a Service, which groups all related Pods together and provides a single, stable IP address and name to access them, ensuring reliable communication between the components of your application.

As seen previously, to configure this type of setup, you need to define a selector, port, and targetPort for each service and the corresponding Pods. Below is an example of configuration for the back-end:

marijan$ cat service-backend-definition.yaml
apiVersion: v1
kind: Service
metadata:
  name: back-end
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: 80
  selector:
    app: myapp
    type: back-end

Additionally, we have specified the type as ClusterIP. Since ClusterIP is the default type of a Service, this line could be omitted.
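Other Pods then reach the back-end through the name of the Service rather than a Pod IP: the cluster DNS resolves back-end to its Cluster IP (the full name is back-end.<namespace>.svc.cluster.local). A sketch of a front-end Pod passing this address to the application, where the Pod name and the BACKEND_URL variable are hypothetical settings:

```yaml
# Hypothetical front-end Pod that reaches the back-end through its Service
apiVersion: v1
kind: Pod
metadata:
  name: front-end
  labels:
    app: myapp
    type: front-end
spec:
  containers:
    - name: front-end
      image: nginx
      env:
        - name: BACKEND_URL          # hypothetical variable read by the app
          value: "http://back-end:80" # Service name, stable across Pod restarts
```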

Network Policy

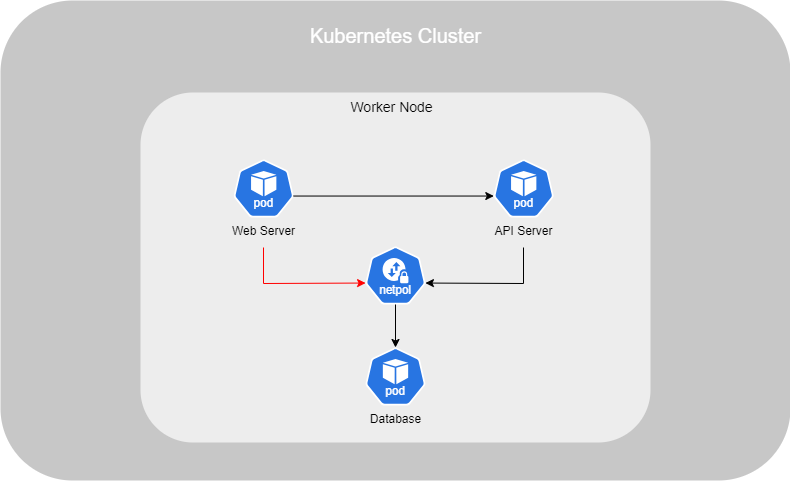

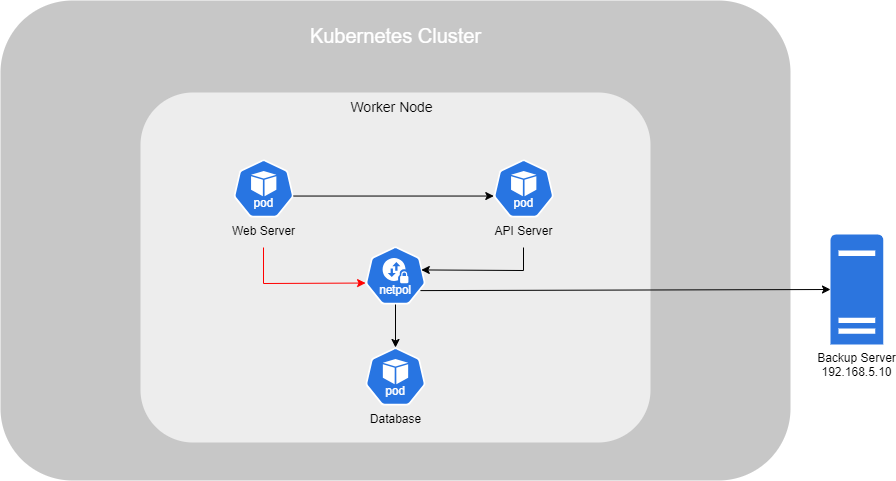

To understand how Network Policies work, it's important to understand the concepts of Ingress and Egress traffic.

For example, for a web server, Ingress traffic refers to incoming traffic from users, while Egress traffic refers to outgoing traffic, such as requests to an API server. These terms depend on the point of view. In this case, we are talking about the web server, but from the perspective of the API server, Ingress would be the traffic coming from the web server, and Egress would be the outgoing traffic to the database.

By default, all Pods in a Kubernetes cluster can communicate with each other, regardless of the Node they are running on, as if they were all part of a virtual network where communication is unrestricted.

However, in a scenario where you have a web server, an API server, and a database, you may want to restrict access so that the database is only accessible from the API server and not from the web server. To achieve this, you would implement a Network Policy to enforce these access restrictions.

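A Network Policy is an object, like ReplicaSets or Deployments. It uses selectors to define which Pods it applies to, and specifies the Ingress and Egress rules for them. Note that Network Policies are enforced by the network plugin of the cluster: with a plugin that does not support them, such as Flannel, they are accepted but have no effect.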

In the example above, Ingress traffic to the database from the web server is restricted. Only Ingress traffic from the API server is authorised. Below is the configuration of the Network Policy object :

marijan$ cat networkpolicy-definition.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-policy
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              name: api-pod
      ports:
        - protocol: TCP
          port: 3306

The first podSelector targets the Pods labeled as database Pods (role: db). Next, we define the policyTypes, here only Ingress. The ingress rules then specify, through another podSelector, which Pods are allowed to connect to the database Pods. Finally, ports restricts this traffic to port 3306 on the database Pods, so that only the designated traffic is permitted.
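A common complement is a policy that denies all incoming traffic to the Pods of a namespace by default; policies such as db-policy then open only the flows you need. A sketch, where the name default-deny-ingress is an assumption:

```yaml
# Deny all ingress traffic to every Pod of the namespace it is created in
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}     # empty selector: all Pods in the namespace
  policyTypes:
    - Ingress         # no ingress rules listed, so nothing is allowed in
```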

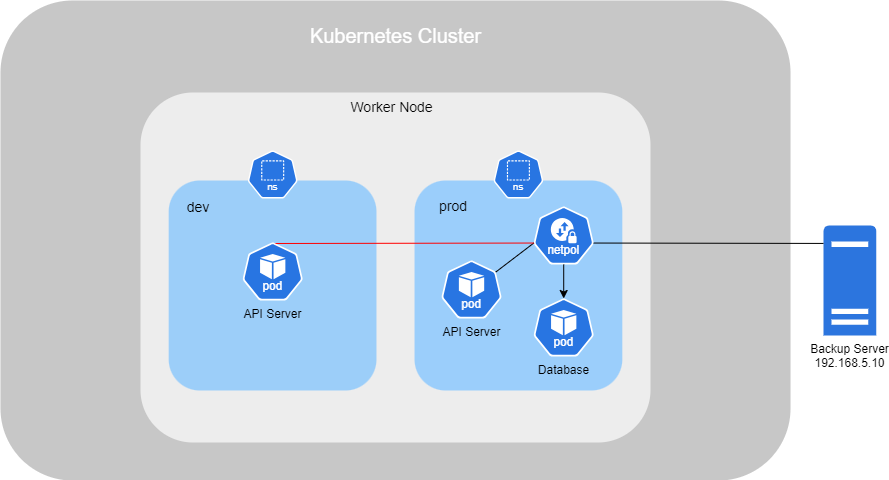

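In this next scenario, the API Server Pod runs in a separate namespace, prod, and a Backup Server, identified by its IP address, also needs to access the database.

In the configuration file, we add a namespaceSelector that matches the prod namespace. It matches labels of namespaces, so the prod namespace must carry the label name: prod (every namespace also carries a kubernetes.io/metadata.name label with its name, which can be used instead). Additionally, we add an ipBlock with the IP address of the Backup Server to allow it access.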

marijan$ cat networkpolicy-definition.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-policy
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              name: api-pod
          namespaceSelector:
            matchLabels:
              name: prod
        - ipBlock:
            cidr: 192.168.5.10/32
      ports:
        - protocol: TCP
          port: 3306

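Each dash ("-") under from starts a separate source, independent of the others: a connection is allowed if it matches any of them. Selectors written in the same item, without a dash between them, must all match. Here, the podSelector and namespaceSelector form a single item, so only Pods labeled name: api-pod inside the prod namespace are allowed. If I had added a dash before namespaceSelector, all Pods within the prod namespace would have access. However, since in the first scenario the web server is also in the prod namespace, including the entire namespace would not be a good idea, as we want to limit access to specific components like the API server.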

If you need to allow Egress (outgoing requests), simply add "Egress" under the policyTypes section and include an egress section. Instead of using from, use to to define the destination you're trying to reach. In this case, the destination is the Backup Server.

marijan$ cat networkpolicy-definition.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: db-policy
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              name: api-pod
          namespaceSelector:
            matchLabels:
              name: prod
        - ipBlock:
            cidr: 192.168.5.10/32
      ports:
        - protocol: TCP
          port: 3306
  egress:
    - to:
        - ipBlock:
            cidr: 192.168.5.10/32
      ports:
        - protocol: TCP
          port: 80

Production Solution

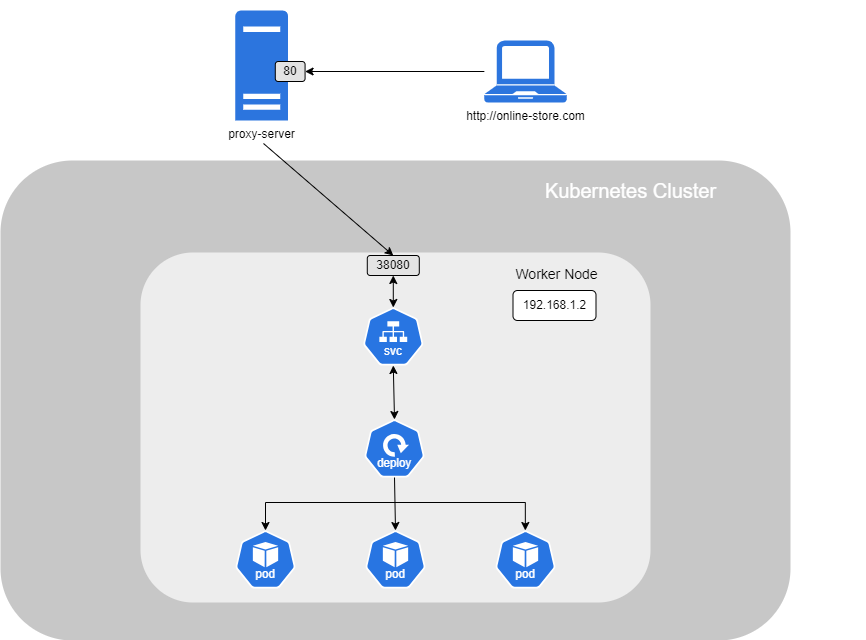

Imagine you have an online store and need to expose it to the internet using Kubernetes.

Self-Hosted Server

If your website runs on a Kubernetes cluster hosted on your own servers, you would create a Service of type NodePort that exposes your Deployment, whose Pods run behind it on a specific port. You would also set up a proxy server in front of your cluster to redirect traffic from port 80 (HTTP) to the NodePort defined in your Service configuration, within the range 30000-32767.

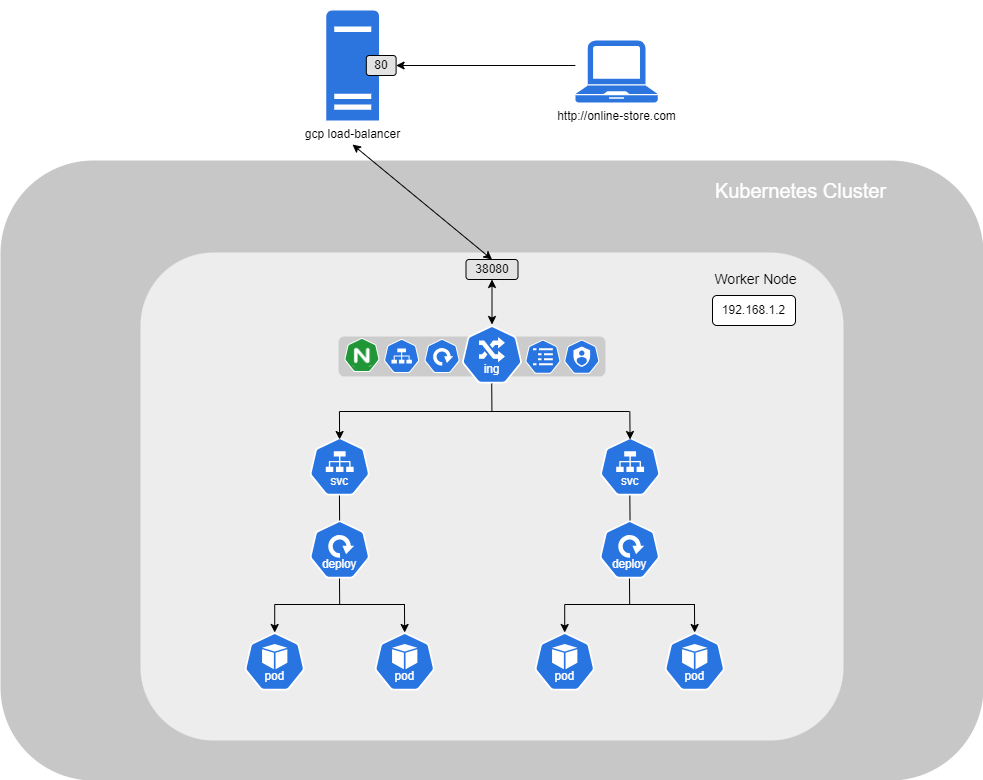

Public Cloud

If your website is hosted on a Kubernetes cluster from a public Cloud provider, the configuration is slightly different. Instead of configuring your Service as a NodePort, you configure it as a LoadBalancer. Kubernetes then automatically provisions a load balancer from the cloud provider and links it directly to your Service.
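As a sketch, the Service of the online store could be declared as follows; the name online-store and the app: myapp label are assumptions:

```yaml
# Hypothetical LoadBalancer Service for the online store
apiVersion: v1
kind: Service
metadata:
  name: online-store
spec:
  type: LoadBalancer
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 80        # port exposed by the cloud load balancer
      targetPort: 80  # port of the application in the Pods
```

Once the cloud provider has provisioned the load balancer, its address appears in the EXTERNAL-IP column of kubectl get services; on a cluster without such an integration, this column stays <pending>. A LoadBalancer Service also receives a Cluster IP and a NodePort, which the load balancer uses to reach the Nodes.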

Ingress

When you have many services running in the same Kubernetes cluster that need access to the internet and must be accessible via different URLs, you'll need to set up another load balancer and a proxy server to route traffic to the correct service. Additionally, you'll need to enable SSL for your application so that users can access your site securely via HTTPS. This setup requires a lot of configuration, and developers need to adapt their services accordingly, especially as they scale.

To simplify all of this and manage everything within the Kubernetes cluster, you can use an Ingress.

However, even with an Ingress, you still need a Service to allow external access to the Ingress controller itself. This can be a LoadBalancer on a cloud platform, or a NodePort Service behind a self-hosted proxy. The Ingress controller then handles the load balancing, TLS (SSL) termination and URL-based routing, which streamlines the management of traffic and security for your applications.

To do that, you have to set up an Ingress controller and Ingress resources.

Controller

By default, when setting up your Kubernetes cluster, an Ingress Controller is not configured, so you must deploy one. There are many Controllers supported by Kubernetes, and in this example, we will use NGINX.

To install the NGINX Ingress Controller, follow these steps:

1. First, you need to install Helm. Here are the steps for Debian:

marijan$ curl https://baltocdn.com/helm/signing.asc | gpg --dearmor | sudo tee /usr/share/keyrings/helm.gpg > /dev/null
marijan$ sudo apt-get install apt-transport-https --yes
marijan$ echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/helm.gpg] https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list
marijan$ sudo apt-get update
marijan$ sudo apt-get install helm

2. Next, execute the following command to deploy the NGINX Ingress Controller:

marijan$ helm upgrade --install ingress-nginx ingress-nginx \
  --repo https://kubernetes.github.io/ingress-nginx \
  --namespace ingress-nginx --create-namespace

3. A new namespace is created, along with the Deployment and the Services. You can verify it by running:

marijan$ kubectl get all --namespace=ingress-nginx
NAME                                           READY   STATUS    RESTARTS   AGE
pod/ingress-nginx-controller-d49697d5f-74846   1/1     Running   0          35h

NAME                                         TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             LoadBalancer   10.98.157.94   <pending>     80:30551/TCP,443:32587/TCP   35h
service/ingress-nginx-controller-admission   ClusterIP      10.106.93.95   <none>        443/TCP                      35h

NAME                                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/ingress-nginx-controller   1/1     1            1           35h

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/ingress-nginx-controller-d49697d5f   1         1         1       35h

Resources

Now that you have set up the controller, you need to configure Ingress resources. An Ingress resource is a set of rules and configurations applied to the controller, determining how incoming traffic is routed to the backend services. For example, if you have two websites and want to route traffic from a specific domain to a particular Pod, you can configure the Ingress resource to handle this routing.

Like any other object in Kubernetes, an Ingress resource is defined in a .yaml file. Here is an example :

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-localhost
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: marijan.testdomaine.ch
    http:
      paths:
      - backend:
          service:
            name: demo
            port:
              number: 80
        path: /
        pathType: Prefix
```

If you have a website with two different sections, such as my-online-store.com, and you want to route traffic to /first and /second, here is an example of the configuration :

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-localhost
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: marijan.testdomaine.ch
    http:
      paths:
      - path: /first
        pathType: Prefix      # pathType is required in networking.k8s.io/v1
        backend:
          service:
            name: demo-first
            port:
              number: 80
      - path: /second
        pathType: Prefix
        backend:
          service:
            name: demo-second
            port:
              number: 80
```

You can also create a new resource directly from the command line :

```shell
marijan$ kubectl create ingress <name> --class=nginx --rule="<host>/<path>=<service>:<port>"
```

But don't forget to deploy your application and expose it with the following command, which automatically creates a Service :

```shell
marijan$ kubectl expose deployment demo
```
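As a rough sketch, `kubectl expose deployment demo` produces a Service similar to the one below. It assumes, hypothetically, that the demo Pods carry the label `app: demo` and listen on port 80; in reality `kubectl expose` copies the selector and port from the Deployment itself.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: demo            # by default the Service takes the Deployment's name
spec:
  selector:
    app: demo           # assumed Pod label (expose reuses the Deployment's selector)
  ports:
  - port: 80            # port referenced by the Ingress backend
    targetPort: 80      # assumed container port
```

Writing the Service by hand like this is mostly useful when you want to keep it in version control next to the Ingress definition.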

Annotations

You can add extra options to an Ingress resource under its metadata.annotations section. This is useful when you need controller-specific behavior.

For the list of supported annotations, refer to your Ingress Controller's documentation.
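As an illustration, assuming the NGINX Ingress Controller installed above, the following sketch adds the ingress-nginx `rewrite-target` annotation, which rewrites the matched path before the request is forwarded to the backend. The name demo-rewrite is hypothetical, and other Ingress Controllers use different annotations.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-rewrite                              # hypothetical name
  namespace: default
  annotations:
    # ingress-nginx specific: send requests matching /first to the backend as "/"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx
  rules:
  - host: marijan.testdomaine.ch
    http:
      paths:
      - path: /first
        pathType: Prefix
        backend:
          service:
            name: demo-first
            port:
              number: 80
```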

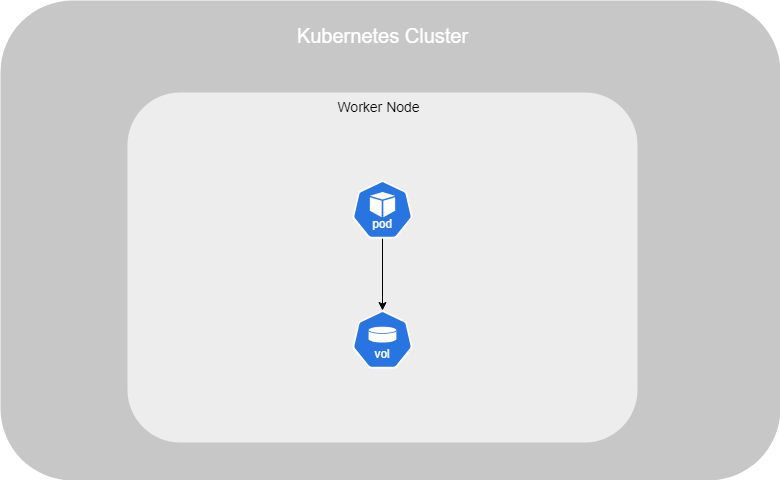

Volumes

In Kubernetes, just like in Docker, data stored within a Pod is ephemeral and is lost once the Pod is terminated. To ensure data persistence, you need to attach a volume to the container running within the Pod.

When a Pod uses a volume whose storage lives outside the Pod, such as a directory on the Node, any data stored in that volume remains even if the Pod is deleted, ensuring data persistence.

Here is an example of an attached volume :

marijan$ cat volume-definition.yml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: random-number-generator
spec:
  containers:
  - name: alpine
    image: alpine
    command: ["/bin/sh","-c"]
    args: ["shuf -i 0-100 -n 1 >> /opt/number.out;"]
    volumeMounts:
    - mountPath: /opt
      name: data-volume
  volumes:
  - name: data-volume
    hostPath:
      path: /data
      type: Directory   # /data must already exist on the Node
```

In the example above, we have created a Pod using an Alpine image as the container. A command has been set up to create a file with a random number between 0 and 100, which is stored at /opt/number.out.

To make the data persistent, we added a volume named data-volume of type hostPath, which points to the /data directory on the Node where the Pod runs.

Next, we defined the volumeMounts option, where we specified the mount path inside the container and referenced the volume by its name.

To summarise, the number.out file is written to the /opt directory inside the container, which is actually backed by the /data directory on the Node. Even if the Pod is deleted, the file persists on that Node.

However, this solution works only if your application runs on a single Node. In a multi-node cluster, each Node has its own /data directory, so a Pod rescheduled onto another Node will not find the data written on the previous one.

To overcome this limitation, you can use an external, replicated storage solution. Kubernetes supports various types of persistent storage options such as NFS (Network File System), GlusterFS, and others. Additionally, public cloud providers offer integrated solutions like AWS EBS (Elastic Block Store), Azure Disk, and Google Cloud Persistent Disks, which ensure data is available across multiple nodes.
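For instance, the Pod from the previous example could use an NFS share instead of hostPath, so that whichever Node runs it sees the same data. This is only a sketch: the server nfs.example.local and the export /exports/data are hypothetical, and every Node needs an NFS client installed.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: random-number-generator
spec:
  containers:
  - name: alpine
    image: alpine
    command: ["/bin/sh","-c"]
    args: ["shuf -i 0-100 -n 1 >> /opt/number.out;"]
    volumeMounts:
    - mountPath: /opt
      name: data-volume
  volumes:
  - name: data-volume
    nfs:
      server: nfs.example.local   # hypothetical NFS server reachable from every Node
      path: /exports/data         # hypothetical exported directory
```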

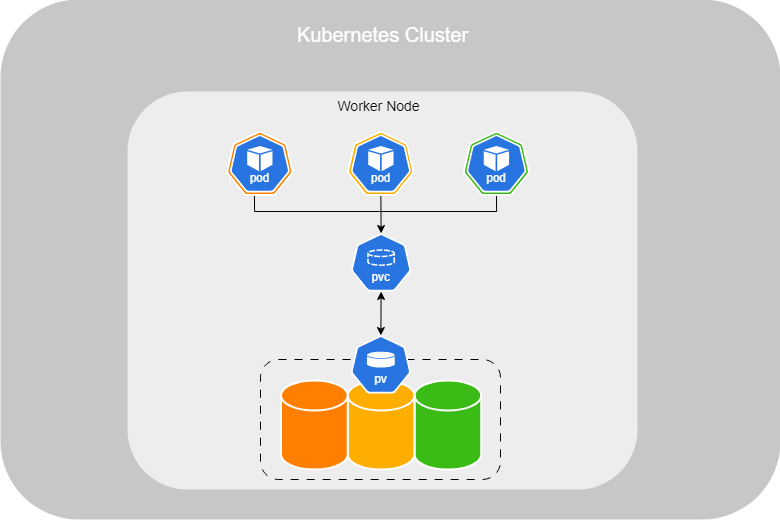

Persistent Volume

In a large environment with many users deploying many Pods, users often need to configure storage for their applications. To simplify storage management for administrators, you should deploy Persistent Volume (PV) objects, regardless of the replicated storage solution in use.

As an administrator, you can provide a pool of storage resources, and users can request a portion of it as needed through Persistent Volume Claims (PVCs). This approach abstracts the underlying storage and allows users to claim storage without needing to manage the specifics of the storage infrastructure.

Persistent Volumes enable efficient management of storage in dynamic environments, ensuring that storage resources are appropriately allocated and consumed.

marijan$ cat persistent-volume-definition.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-vol1
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  hostPath:
    path: /tmp/data
```

In the example above, we have created a Persistent Volume for users with the ReadWriteOnce access mode and 1 GiB of available storage. Kubernetes supports the following accessModes :

- ReadWriteOnce : The volume can be mounted as read-write by a single node.

- ReadOnlyMany : The volume can be mounted as read-only by multiple nodes.

- ReadWriteMany : The volume can be mounted as read-write by multiple nodes.

- ReadWriteOncePod : The volume can be mounted as read-write by a single Pod.

As mentioned earlier, this configuration is not recommended for production environments since it only supports a single node. For production use, you should set up an integrated storage solution that supports multi-node environments and high availability, such as a cloud storage service or a distributed file system.
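As a sketch of a multi-node setup, here is a PV backed by NFS, a storage type that supports ReadWriteMany. The name pv-nfs, the server nfs.example.local and the export /exports/data are hypothetical placeholders.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs                  # hypothetical name
spec:
  accessModes:
    - ReadWriteMany             # NFS can be mounted read-write by several Nodes
  capacity:
    storage: 1Gi
  nfs:
    server: nfs.example.local   # hypothetical NFS server
    path: /exports/data         # hypothetical export
```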

Persistent Volume Claims

Now that the Persistent Volume (PV) is set up, you can create your Persistent Volume Claims (PVC). Kubernetes will automatically bind the PVC to a suitable PV by checking factors such as sufficient capacity, access modes, volume modes, and storage class.

If several PVs match, you cannot easily predict which one will be chosen. To bind a PVC to a specific PV, you can put labels on the PV and a selector in the PVC.
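A minimal sketch of label-based binding, assuming a hypothetical label `tier: fast` added to the PV above. The empty storageClassName keeps the claim from being assigned a default StorageClass, so it can only bind to PVs that have no class, like this one.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-vol1
  labels:
    tier: fast                 # hypothetical label used for selection
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  hostPath:
    path: /tmp/data
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ""         # only bind to PVs without a StorageClass
  selector:
    matchLabels:
      tier: fast               # only PVs carrying this label are candidates
  resources:
    requests:
      storage: 500Mi
```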

If no PV matches the PVC’s requirements, the PVC will remain in a pending state.

Here is an example of a PVC configuration that matches the PV above :

marijan$ cat persistent-volume-claim-definition.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
```

Now that both are set up, you can observe the following behavior :

```
marijan$ kubectl get pvc
NAME      STATUS   VOLUME    CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
myclaim   Bound    pv-vol1   1Gi        RWO                           <unset>                 3s
```

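Note that the claim is bound to the whole 1Gi volume even though it requested only 500Mi, because a PV can be bound to only one PVC.

When the PVC is deleted, what happens to the PV depends on its reclaim policy. For a manually created PV, the default is Retain : the PV and its data are kept, but the PV moves to the Released state and cannot be bound by another claim until an administrator reclaims it manually. You can change this behavior by adding persistentVolumeReclaimPolicy to your PV configuration :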

- Delete : the volume will be automatically deleted once the PVC is deleted ;

- Recycle (deprecated) : the data in the volume is scrubbed, and the PV becomes available for a new claim.
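For example, if the PV above should be removed together with its claim, the field goes into its spec as in the sketch below. Whether Delete actually works depends on the volume plugin; it is mainly used with cloud disks.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-vol1
spec:
  persistentVolumeReclaimPolicy: Delete   # manually created PVs default to Retain
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 1Gi
  hostPath:
    path: /tmp/data
```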

You can then reference the PVC in the volumes section of your Pod, Deployment or ReplicaSet definition :

marijan$ cat pod-integrate-pvc-definition.yml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: random-number-generator
spec:
  containers:
  - name: alpine
    image: alpine
    command: ["/bin/sh","-c"]
    args: ["shuf -i 0-100 -n 1 >> /opt/number.out;"]
    volumeMounts:
    - mountPath: /opt
      name: data-volume
  volumes:
  - name: data-volume
    persistentVolumeClaim:   # replaces hostPath: storage now comes from the claim
      claimName: myclaim
```

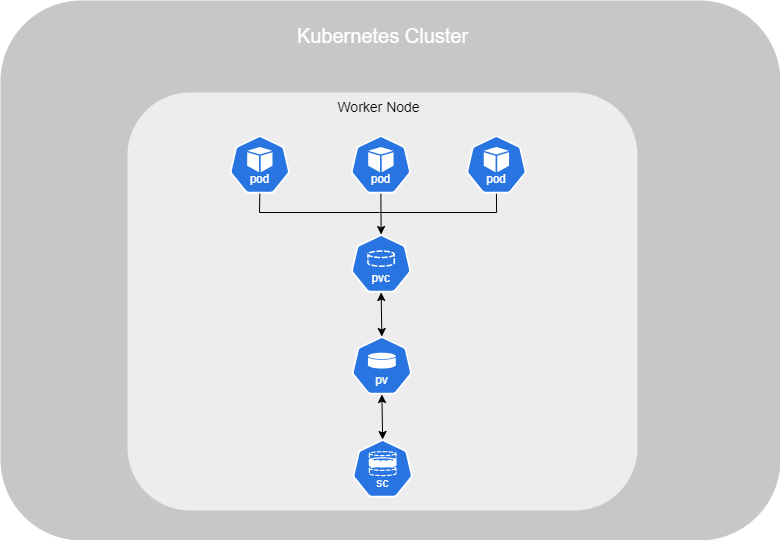

Storage Class

By default, when using persistent storage options such as those provided by public cloud providers, you must manually create a disk and then create a PV linked to it. This method is called static provisioning.

However, there is also a method called dynamic provisioning, which relies on a StorageClass (SC). When a PVC requests a StorageClass, its provisioner automatically creates the storage (for example, a cloud disk) and the matching PV, which the Pod then uses through the PVC.

Here is an example of configuration of a Storage Class using GCE (Google Cloud) :

marijan$ cat storage-class-definition.yaml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: google-storage
provisioner: kubernetes.io/gce-pd
```

Then, add the storageClassName field to your PVC definition :

marijan$ cat persistent-volume-claim-definition.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: google-storage
  resources:
    requests:
      storage: 500Mi
```
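A StorageClass can also carry optional settings. The sketch below is a variant of the google-storage class with a few commonly used fields; the name google-storage-ssd is hypothetical, and the parameters section is specific to the gce-pd provisioner.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: google-storage-ssd                 # hypothetical name
provisioner: kubernetes.io/gce-pd
parameters:
  type: pd-ssd                             # gce-pd disk type (pd-standard or pd-ssd)
reclaimPolicy: Delete                      # applied to the PVs it creates (Delete is the default)
volumeBindingMode: WaitForFirstConsumer    # provision only once a Pod using the PVC is scheduled
```

Delaying provisioning with WaitForFirstConsumer lets the disk be created in the zone where the Pod is actually scheduled.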

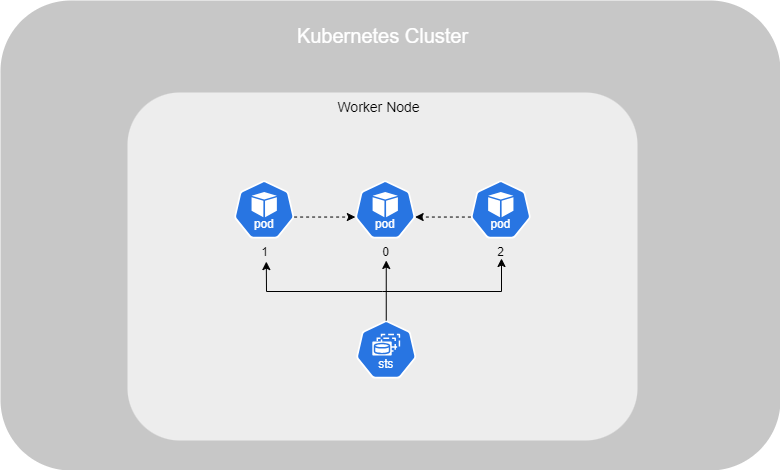

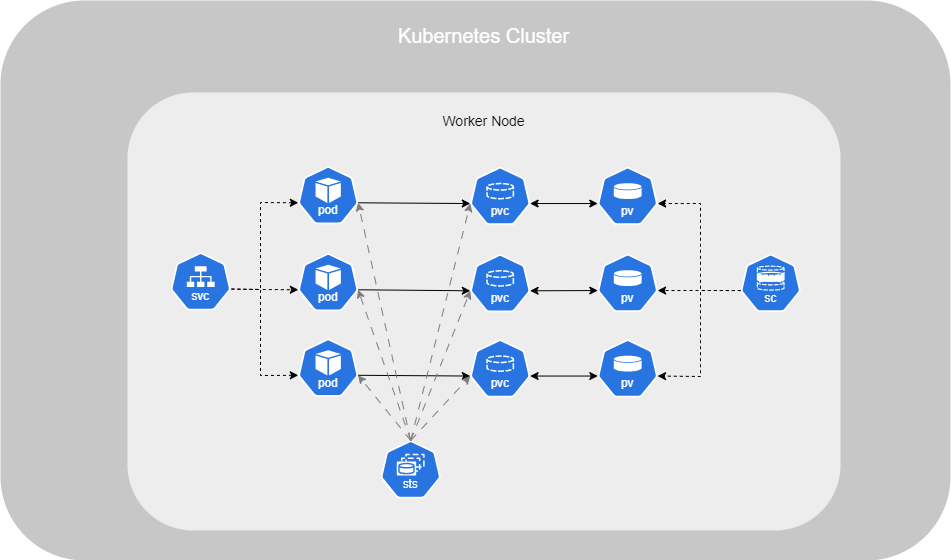

Stateful Sets

When you deploy an application such as a database and require high availability, you typically run multiple Pods and set them up in a specific order :

- First, three Pods are created: one master and two slaves.

- The data from the master will be cloned to slave-1.

- Continuous replication will be enabled from the master to slave-1.

- Once slave-1 is ready, the data will be cloned from slave-1 to slave-2.

- Continuous replication will then be enabled from the master to slave-2.

- Finally, the master’s address will be configured on both slaves.

However, a Deployment cannot guarantee this sequence. It creates all its Pods at the same time, gives them random names, and has no way to designate which Pod is the master, so the slaves have no stable address to replicate from.

To achieve this, you need to set up a StatefulSet. A StatefulSet creates its Pods one at a time, in order (each Pod must be running and ready before the next one starts), and gives each Pod a stable name made of the StatefulSet name and a unique number: the master is typically number 0, and the slaves are numbered 1, 2, and so on. You can then define the master's name in your application configuration, allowing it to be used as a reference by the slaves.

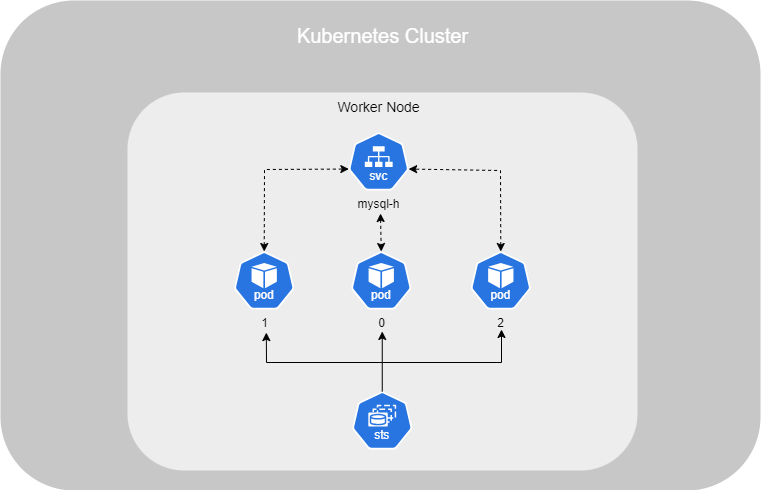

To deploy it, the configuration is similar to a Deployment, but you also need to set serviceName. This is the name of a headless Service that gives each Pod its own DNS entry, while the Pods themselves are named after the StatefulSet followed by their number :

marijan$ cat statefulset-definition.yaml

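```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  serviceName: mysql-h
  replicas: 3
  selector:
    matchLabels:
      app: mysql          # must match the template labels
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD   # the mysql image will not start without a root password setting
          value: "changeme"           # hypothetical value; use a Secret in practice
```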
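The serviceName field refers to a headless Service, which you have to create yourself. A minimal sketch, assuming MySQL's default port 3306:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-h          # must match serviceName in the StatefulSet
spec:
  clusterIP: None        # "None" makes this a headless Service
  selector:
    app: mysql           # selects the StatefulSet's Pods
  ports:
  - port: 3306
```

Because the Service has no cluster IP, DNS returns a record per Pod instead of a single load-balanced address.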

Through this headless Service, CoreDNS, the DNS service within a Kubernetes cluster, can now resolve each Pod individually :

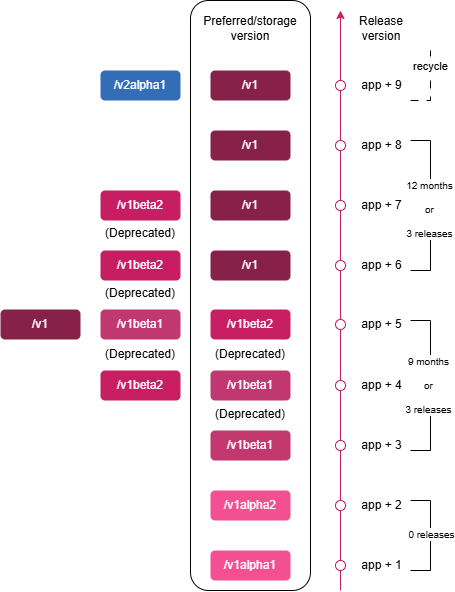

```
mysql-0.mysql-h.default.svc.cluster.local
mysql-1.mysql-h.default.svc.cluster.local
mysql-2.mysql-h.default.svc.cluster.local
```